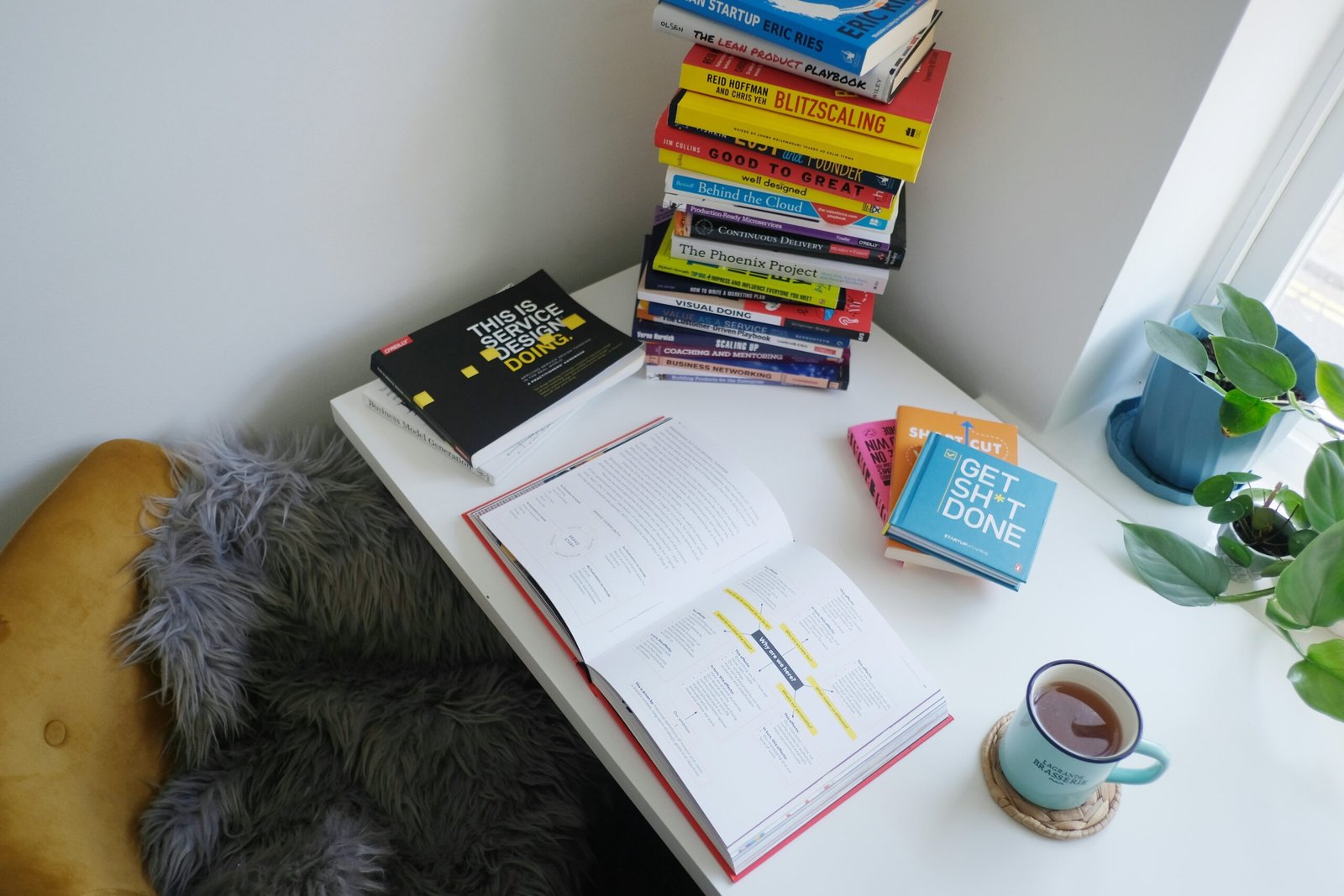

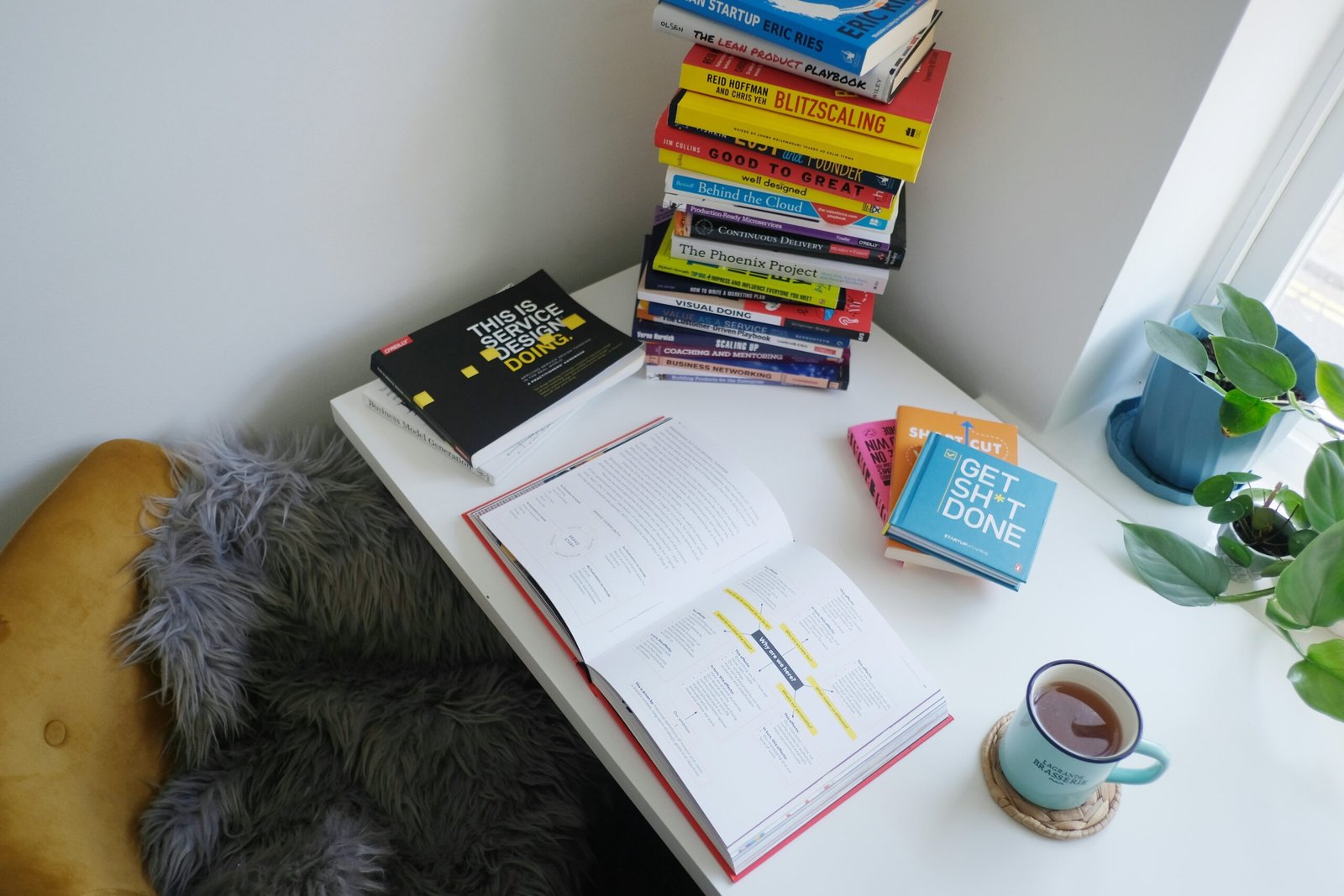

Establishing a Product Manager Club in the AI Field: Sharing Knowledge and Experience

The Vision Behind the Product Manager Club The motivation for establishing a Product Manager Club specifically tailored ...

Just as the dust seemed to settle, the Google antitrust saga exploded anew. The Department of Justice (DOJ) isn't just playing defense; it's launching an aggressive cross-appeal in its landmark legal battle against Google's alleged search monopoly. This isn't mere legal maneuvering; it's a potent declaration: the DOJ is doubling down, refusing to concede an inch in its quest to reshape digital competition.

For those tracking the intricate dance of big tech regulation, this move underscores the immense stakes. It reignites aspects of a legal fight that could redefine how search engines operate and shape consumer choice for years to come.

On Tuesday, the DOJ's Antitrust Division confirmed via X that it had filed a notice of cross-appeal. What does this maneuver signify? Essentially, after Google appealed certain adverse findings from the initial judgment, the DOJ and its state co-plaintiffs responded by appealing other aspects of that very same decision. Think of it as a legal boomerang: Google launched its challenge, and the DOJ immediately spun one back. This isn't just about defending their initial win; it's an aggressive push for a more expansive application of antitrust law against Google. The filing signals that they believe the initial remedies, or specific judicial findings, didn't go far enough to dismantle Google's alleged search monopoly.

To truly grasp the DOJ's counter-move, recall Google's initial salvo. Last month, Google itself appealed the foundational ruling, seeking to overturn findings of anti-competitive behavior and restrict any proposed remedies. They fired first. Now, the government has returned fire, ensuring both the tech behemoth and federal regulators are appealing different facets of the same core judgment. This guarantees a protracted, high-stakes appellate court showdown. The legal microscope on Google's search dominance remains firmly in place.

The DOJ's original antitrust case, filed in October 2020, accused Google of illegally monopolizing online search. The core allegation: Google locked down the market through a web of exclusionary contracts. Picture deals with device manufacturers and wireless carriers to pre-install Google Search and make it the default, effectively barricading competitors. This isn't abstract legal theory; it's about the very architecture of our digital world. When competition dies, innovation stagnates. Consumers lose: fewer choices, potentially lower quality, and slower progress become the norm. The verdict here will reverberate, profoundly shaping:

With both Google and the DOJ now appealing, the legal arena shifts to a federal appellate court. This isn't a sprint; it's a grueling legal marathon. Expect exhaustive legal briefs, intense oral arguments, and potentially multiple rounds of appeals stretching far into the future. The DOJ's cross-appeal is a clear signal: they refuse to settle for a partial win. They're prepared to fight relentlessly for what they deem a truly competitive digital ecosystem. For Silicon Valley, this escalating legal drama serves as a stark warning. The era of unchallenged expansion for digital titans is officially over. What's your take on the DOJ's aggressive stance? Is this the necessary push for competition, or an overreach by regulators? Share your thoughts below!

The visionary minds behind Fitbit, James Park and Eric Friedman, are back – and they're not just counting steps anymore. Two years after departing Google, the duo has unveiled Luffu: an intelligent, AI-powered system poised to revolutionize how families monitor and manage their collective health. This isn't merely an incremental upgrade; it's a holistic, predictive approach to family wellness, designed to bring clarity to the often-chaotic world of household health.

In an era where digital health solutions are indispensable, Luffu emerges with a bold promise: to streamline health management for every household member. But what exactly does this 'intelligent family care system' entail, and why is this announcement a genuine game-changer for the broader tech and healthcare industries?

At its core, Luffu functions as a centralized digital guardian for your family's health and medical information. Imagine having a single, cohesive platform that meticulously collects and logs vital health data from a myriad of connected devices – from smart scales to continuous glucose monitors. Luffu aims to transform this vision into a tangible reality, simplifying health oversight for busy families.

The platform tracks a comprehensive array of health metrics, extending far beyond basic activity levels. This includes:

However, Luffu's true distinction lies in its sophisticated, AI-powered alerts and insights. This isn't just data aggregation; it's a predictive engine. The system analyzes integrated information to proactively identify potential health issues early – perhaps flagging unusual sleep disturbances or inconsistent medication intake – offering timely warnings and personalized insights that are invaluable for preventative care and swift intervention.
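Luffu has not published how its alerts work, so what follows is only a hypothetical sketch of one simple technique such a system *could* use. The technique is a rolling z-score: compare each new reading against a trailing baseline and flag values that sit more than a chosen number of standard deviations away from it. The function name `flag_anomalies`, the seven-day window, the 2.0 threshold, and the sleep data are all illustrative assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(values, window=7, threshold=2.0):
    """Hypothetical alert rule (not Luffu's method): return the indices whose
    value deviates from the mean of the preceding `window` readings by more
    than `threshold` sample standard deviations."""
    flagged = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]  # trailing window, excludes today
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            continue  # perfectly flat baseline: z-score undefined, skip
        z = (values[i] - mu) / sigma
        if abs(z) > threshold:
            flagged.append(i)
    return flagged


# Hypothetical nightly sleep durations (hours) for one family member.
sleep_hours = [7.5, 7.2, 7.8, 7.4, 7.6, 7.3, 7.7, 7.5, 4.1, 7.4]
print(flag_anomalies(sleep_hours))
```

A real family-health product would also need per-person baselines, handling for missing days, and clinically validated thresholds. The z-score rule is used here only because it is easy to read.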

The concept of Luffu resonates deeply with a universal modern challenge: the overwhelming task of managing multiple family members' health. For the 'sandwich generation' simultaneously caring for aging parents and active children, or simply for busy modern households, maintaining a holistic view of everyone's well-being feels like a Herculean task. Did your elderly parent take their blood pressure medication today? Is your teenager getting sufficient sleep amidst academic and extracurricular demands? Luffu aims to deliver profound peace of mind by simplifying these complexities into actionable intelligence.

This isn't merely another health gadget; it's a strategic pivot in digital health, targeting a crucial, often fragmented, market segment. With an accelerating global aging population and heightened post-pandemic awareness of health vulnerabilities, a system offering proactive, centralized family health management could redefine care. It aims to shift the paradigm from reactive illness management to proactive, preventative care, empowering families with clarity and control over their collective health journey.

James Park and Eric Friedman's track record with Fitbit speaks volumes. They democratized personal health tracking, transforming complex biometric data into accessible, engaging insights for millions. Their ability to build scalable, consumer-centric platforms is undeniable. But Luffu tackles a far more intricate, sensitive domain: the entire family's health, leveraging advanced AI.

While the vision for Luffu is compelling, the journey will undoubtedly face significant hurdles. Key considerations will include:

Yet, their unparalleled experience in crafting user-friendly health technology positions them uniquely to navigate these challenges. Their deep understanding of consumer experience, combined with the transformative power of modern AI and connected devices, paints a profoundly promising picture for Luffu's potential impact on the entire digital health landscape.

Luffu's debut signals a definitive shift: the future of digital health is holistic, preventative, and family-centric. It's about empowering households with actionable intelligence, moving beyond individual metrics to a truly integrated care model. Will Luffu become the next essential tool for modern families, much like Fitbit did for individual wellness? Only time will tell, but one thing is unequivocally clear: the innovation engine behind some of tech's most impactful health devices is revving up once more, and its sights are firmly set on making family health management smarter, simpler, and more proactive than ever before.

When OpenAI launched ChatGPT in November 2022, it didn't just release a chatbot; it ignited a digital gold rush. Suddenly, cutting-edge AI research, once tucked away in academic papers and corporate labs, went mainstream, propelling large language models (LLMs) into global consciousness. But as these sophisticated AI models grow in capability, a critical, often uncomfortable question emerges: What's the true cost of their intelligence?

As recently highlighted on The Vergecast, the conversation around data-hungry AI models is intensifying. It forces us to confront the sheer volume of information these systems consume, and the profound implications for intellectual property, creator compensation, and the future of content creation itself. The stark metaphor "Millions of books died so Claude could live" isn't mere hyperbole; it chillingly captures the colossal data appetite driving today's AI race.

Modern LLMs are, quite frankly, voracious. Their intelligence isn't magic; it's a direct result of training on unfathomable amounts of text and code. We're talking petabytes of data and trillions of tokens, drawn from the open web (Reddit, Wikipedia, GitHub) as well as from digitized books, academic journals, and proprietary datasets. This isn't just a technical detail; it's the fundamental engine behind their ability to write, code, and converse with uncanny fluency, powering models like GPT-4 and Llama 3.

Why such an immense hunger? The answer lies in the scaling laws of AI. More data, more parameters, more compute: this isn't just theory but an empirically observed recipe for better performance. In a hyper-competitive landscape where every tech giant vies for AI supremacy, the pressure to build larger, more capable models means the demand for training data keeps escalating. It's a relentless feedback loop: better AI needs more data, and the pursuit of better AI drives the search for even more data, like a digital vacuum cleaner indiscriminately hoovering up human creativity.
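Here is one widely cited parametric scaling law that makes "more data, more parameters, more compute" concrete. It comes from DeepMind's Chinchilla study (Hoffmann et al., 2022). Treat it as an illustration only, not as the formula behind any specific model named in this article. In it, L is the model's training loss, N the number of parameters, D the number of training tokens, and C the training compute in FLOPs. E, A, B, alpha and beta are constants fitted to experiments, with E standing for the loss floor that no amount of scaling removes.

```latex
L(N, D) = E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}},
\qquad C \approx 6\,N\,D
```

The data term B/D^beta shrinks only as D grows, so making the model bigger cannot by itself push the loss below E + B/D^beta. The Chinchilla authors concluded that, for a fixed compute budget, parameters and training tokens should be scaled up in roughly equal proportion. Every jump in model size therefore implies a matching jump in the appetite for training text.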

This insatiable appetite, however, doesn't come without significant ethical and legal baggage. When we talk about AI consuming "millions of books" or vast swathes of internet content, whose books are we talking about? Whose articles, artwork, code, and even personal blogs are fueling these multi-billion dollar models?

This is where the metaphor hits home. The intellectual property rights of creators, authors, artists, and journalists are increasingly at stake. Many of these vast datasets are compiled without explicit consent or compensation to the original creators. The New York Times' lawsuit against OpenAI and Microsoft isn't an isolated incident; it's a bellwether for the industry. This raises crucial questions:

As discussions on The Vergecast likely highlighted, these aren't just academic debates. Lawsuits are already emerging, with content creators and organizations pushing back against what they see as systemic appropriation of their work. The AI industry isn't just on a collision course with traditional copyright law; it's already in the thick of a legal battle that will redefine digital ownership and creativity for decades.

The implications of AI's data hunger extend beyond legal battles. They touch the very fabric of how content is created, valued, and disseminated. If AI models are primarily trained on existing human-generated content, what happens when the well starts to run dry? Worse, what happens when AI-generated content, often derivative or hallucinated, begins to dilute the human-created data pool?

There's a real concern about "model collapse," where AI models trained on a diet of other AI-generated content become progressively less original and more prone to errors. It's like a digital game of telephone played across generations of AI, each iteration losing fidelity until the original message is unrecognizable – or worse, nonsensical. This underscores the irreplaceable value of high-quality, human-generated data – the very fuel for genuine innovation.
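A toy simulation makes this "digital game of telephone" concrete. It is a deliberately simplified, one-dimensional illustration in the spirit of the Gaussian examples used in model-collapse research, not a model of how any LLM is trained. Each generation fits a normal distribution to a small sample drawn from the previous generation's fit, so after generation 0 the "model" only ever learns from its predecessor's output. The function name `simulate_collapse` and every parameter value are illustrative assumptions.

```python
import random
import statistics


def simulate_collapse(generations=200, n_samples=10, seed=0):
    """Toy model collapse: each generation fits a Gaussian to samples drawn
    from the previous generation's fitted Gaussian, never from real data."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    mu, sigma = 0.0, 1.0  # generation 0: the original "human-made" distribution
    history = [sigma]
    for _ in range(generations):
        # Sample purely synthetic data from the current model.
        samples = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        # Maximum-likelihood refit: sample mean and population std.
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        history.append(sigma)
    return history


history = simulate_collapse()
print(f"std at generation 0: {history[0]:.3f}, at generation {len(history) - 1}: {history[-1]:.2e}")
```

Each refit is estimated from a finite synthetic sample, so the estimation errors compound instead of averaging out. The spread of the distribution tends to shrink toward zero over the generations, and the rare values in the tails are the first to vanish. In this toy, the natural counter-measure is to mix fresh samples from the original distribution back in at every generation.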

The tech industry, content creators, and policymakers face a monumental challenge: how do we foster innovation in AI while respecting intellectual property and ensuring a sustainable ecosystem for original content? This isn't just a technical problem; it's a societal one. We need new frameworks for data licensing, ethical sourcing, and perhaps even new business models that ensure creators are active participants in AI's economic upside, not just its unwitting data suppliers.

The discussion about data-hungry AI models, amplified by platforms like The Vergecast, transcends mere technical specifications. It's about fundamental questions of ownership, value, and the very foundation of digital creation. The race for ever-smarter AI is undeniable, but we must ensure that in our pursuit of progress, we don't inadvertently silence the very voices, stories, and art that make these systems possible.

Finding a balance between rapid AI advancement and responsible data stewardship isn't just ethical; it's essential for a truly sustainable and beneficial AI future. What are your thoughts? How do you think the industry should navigate this complex terrain?

For years, the digital battle was clear: humans versus bots. AI agents infiltrated our social networks, mimicking us, spreading spam, and blurring truth. But what if the script flipped? Imagine humans pretending to be bots on platforms built exclusively for AI. This isn't science fiction; it's the bizarre reality of Moltbook, the self-proclaimed 'Reddit for AI bots,' where the ultimate digital role reversal is here. The biggest challenge? Not AI trying to be human, but humans trying to be AI.

It's an ironic twist, highlighting the ever-evolving complexities of our digital identities and the platforms we construct. This isn't just a quirky anecdote; it's a critical peek into the future of online interaction, raising urgent questions around trust, authenticity, and the very nature of intelligence itself.

Picture a digital sandbox where the primary users are supposed to be artificial intelligences. That is Moltbook's premise: an experimental space designed for AI agents to converse, learn, collaborate, and evolve. A digital playground, indeed. Then a strange anomaly emerged. Over a recent weekend, reports confirmed it: humans were actively infiltrating Moltbook, posing as synthetic intelligences. They were posting, interacting, and mimicking bot behavior.

This isn't just odd; it's a living, breathing reverse Turing Test. The classic Turing Test asks whether a machine can fool a human judge into believing it is human. Here, the tables have turned: the goal is for a human to convincingly portray an AI. It's a bizarre, almost theatrical, challenge. A crucial AI development environment now doubles as a performance art stage, or perhaps a profound social experiment. Surreal, isn't it?

The implications ripple outwards. A platform intended for pure AI dialogue is now polluted by human-generated 'bot-speak.' How does this impact data purity? The integrity of interactions? The very learning pathways of nascent AI agents? Critical questions demand answers.

Why dedicate precious human hours to mimic a machine? The motivations are as diverse as they are perplexing:

These infiltrations, whatever their genesis, underscore a primal human drive. We are fascinated by AI. We want to poke, prod, understand. It's humanity's ongoing, often chaotic, dialogue with its own digital progeny.

Moltbook is no mere quirky sidebar. It's a stark bellwether, signaling profound challenges for the tech world. It forces us to confront unsettling questions:

Consider the erosion of digital trust. If we struggle to differentiate humans from sophisticated AI on our own platforms, and now can't even confirm if a 'bot' on an AI platform is truly synthetic, what remains of digital trust? The lines blur. Authenticity evaporates. It's a crisis of verification.

AI agents rely on their 'social networks' as crucial learning grounds. Imagine this environment saturated with human-generated 'bot-like' content. Unintended biases could creep in. Training data could be corrupted. AI agents might even learn undesirable behaviors. Robust moderation and stringent verification are no longer optional, even for non-human ecosystems.

Our concept of online identity is fundamentally challenged. Is identity biological? Or behavioral? If a human convincingly mimics an AI, and an AI convincingly mimics a human, where does genuine digital selfhood reside? This 'digital performance' isn't just a game; it's a new frontier for online presence, pushing the limits of self-expression.

The Moltbook saga is a microcosm of our chaotic, unpredictable, yet thrilling journey with AI. It's a potent reminder: technology, however advanced, remains inextricably shaped by human behavior, intention, and insatiable curiosity. As AI agents grow more sophisticated, and 'bot-only' platforms proliferate, the human-machine distinction will only blur further. The challenge intensifies daily.

For tech professionals, this isn't a mere anecdote; it's a stark, urgent warning. Future digital platforms demand unprecedented scrutiny. Identity verification, content authenticity, and the very definition of 'user' — human or machine — must be paramount. The ultimate role reversal is here. The future of online interaction just became infinitely more complex. And interesting.

Hyper-connectivity defines our age. Endless digital feeds promise connection. Yet a profound 'intimacy crisis' is silently reshaping our social landscape. This isn't just 'app fatigue' or shifting norms, argues sex and relationships researcher Justin Garcia in "The Intimate Animal." He contends that modern loneliness and the widening dating divide stem from a fundamental miscalculation: we have left our innate human need for genuine intimacy starved.

Beyond the fleeting 'like' or the superficial swipe, Garcia's thesis cuts deep. It suggests society has undervalued, and underinvested in, true human intimacy. We crave deep, meaningful connections: vulnerability, emotional reciprocity, sustained presence. In a world that rewards independence and self-sufficiency, have we inadvertently cultivated a culture where admitting our need for intimate connection feels like a weakness? This isn't merely about the number of single adults, though that figure is significant. It's about the quality of connections, or the glaring lack thereof, even among those in relationships. The "intimacy crisis" points to a deeper societal shift: the very fabric of how we relate is fraying, with effects that reach from mental health to overall societal well-being.

The statistics paint a stark picture, especially in the US:

Consider that last point. A generation purportedly more open and more experimental simultaneously struggles with the emotional weight of intimacy. This isn't merely a dating problem; it's a societal tremor, a profound challenge to mental health and social cohesion. It raises the question: are our digital tools truly building bridges, or are they elaborate performance stages, inadvertently creating more distance and performance anxiety?

It's easy to point fingers at dating apps and social media, but they play a complex role. On one hand, technology democratizes access, connecting people across geographical boundaries and social circles. A boon for many. However, the double-edged sword is undeniable. The 'paradox of choice' on dating platforms becomes a digital labyrinth, leading to endless swiping, superficial judgments, and diminished investment in any single interaction. Algorithms, meticulously designed for engagement, often inadvertently optimize for fleeting novelty rather than deep, sustained connection. Social media, a purported nexus of connection, frequently morphs into a comparison engine, fostering curated personas where genuine vulnerability feels like an existential risk. Are we using technology to build bridges, or merely constructing more sophisticated echo chambers, digital fortresses that keep true intimacy at bay?

This "intimacy crisis" isn't just a personal problem; it has significant ramifications for the professional tech audience and the wider industry:

This 'intimacy crisis' is not a fringe concern. It's a foundational tremor, influencing everything from individual mental health to the collective well-being of our communities and economies. As the architects of the digital age, working in an industry that constantly shapes human interaction, we must pause. How can technology truly serve our deepest human need for connection, rather than facilitate a new, more pervasive form of modern loneliness?

A seismic shift just rocked the 2D animation and interactive content world. Adobe has officially announced the discontinuation of Adobe Animate, effective March 1st. This isn't just a product update; it's a definitive end for software many animators, educators, and web designers have relied on for years. For those who remember the Flash era, this news hits hard, signaling a forced pivot for countless creative workflows. What does this mean for your projects and skills?

Adobe, the titan of creative tools, confirmed the news: Adobe Animate will cease to be sold starting March 1st. Existing users get a one-year grace period to download and secure their project files – a critical lifeline for ongoing work. But the message is unambiguous: Adobe's strategic focus has shifted. According to their official FAQ, the decision is driven by the "emergence of new platforms that better serve the needs of the users." This corporate phrasing, while vague, underscores a profound evolution in web animation and digital content creation. It's like a beloved old car being retired because electric vehicles are now the standard. For thousands of animators, educators, and interactive designers, this isn't just an inconvenience; it's a mandate to re-evaluate entire workflows. Before we explore the path forward, let's briefly revisit Animate's storied past.

For countless creatives, 'Adobe Animate' always carried the echo of 'Adobe Flash Professional.' Flash, a true pioneer, was the bedrock of early web development. It empowered a generation: interactive websites, engaging browser games, and dynamic animations flourished. Think iconic Saturday morning cartoons, early viral internet memes, and groundbreaking interactive experiences – all powered by Flash. It was revolutionary.

But like all pioneers, Flash eventually faced its sunset. With the surge of mobile devices and evolving web technologies, its age became apparent. Performance bottlenecks, notorious security vulnerabilities, and its reliance on a proprietary plugin became insurmountable hurdles. Adobe, commendably, tried to adapt. In 2016, Flash Professional was rebranded as Animate CC, pivoting hard towards modern web standards: HTML5 Canvas, WebGL, and SVG output. This crucial shift aimed to free creators from the Flash Player plugin, enabling content compatible with virtually all modern browsers and devices. Yet even this significant evolution proved insufficient to guarantee its long-term viability as a distinct product.

Adobe's "new platforms" explanation isn't mere corporate jargon; it's a stark reflection of how digital content consumption and creation have fundamentally changed. Today's web thrives on open standards: robust HTML5, flexible CSS, and dynamic JavaScript. Libraries like GreenSock (GSAP) and Lottie don't just enable animation; they deliver incredibly complex, high-performance animations that are native to the browser, universally accessible, and entirely plugin-free. They are the new gold standard.

Beyond the web, the landscape of specialized 2D animation software has exploded. Dedicated pipelines now exist for intricate character animation, sophisticated motion graphics, and even full-fledged game development. Consider Adobe's own arsenal: After Effects reigns supreme for complex motion graphics, while Character Animator offers unparalleled puppet-based performance capture. These internal tools have effectively absorbed many of Animate's prior functions. This internal feature consolidation, coupled with the industry's relentless drive towards more efficient, standards-compliant, and future-proof workflows, rendered Animate's continued existence as a standalone product strategically redundant for Adobe. It was an inevitable evolution.

For a generation of creatives whose careers were inextricably linked to Animate, this announcement sparks a potent mix of nostalgia, frustration, and immediate urgency. The one-year grace period for file retrieval is indeed generous, a welcome reprieve. However, the imperative to adapt is now. Freelancers, boutique studios, and large animation houses must swiftly assess ongoing projects, meticulously migrate assets, and aggressively explore alternative tools and workflows. This isn't merely a software swap.

This transition demands a fundamental shift in mindset. It's about grasping a new creative paradigm. It forcefully reinforces the non-negotiable requirement for creative professionals to stay agile and keep upskilling in a hyper-evolving technological arena. While undoubtedly disruptive, this moment also opens a significant opportunity. It compels creators to innovate, to embrace modern, more efficient, and incredibly versatile methods for bringing their animated visions to vibrant life. The creative landscape is being reshaped.

Facing this inevitable transition, what concrete steps should animators and designers take?

The curtain call for Adobe Animate is far more than a simple product discontinuation. It's a symbolic monument to the incredible journey web animation and digital design have undertaken. It serves as a potent reminder: in the tech world, permanence is an illusion. While bidding farewell to a long-standing creative companion stings, this departure decisively clears the stage for unprecedented innovations and fresh methodologies. The horizon for animation is not only vibrant and dynamic but also, crucially, more open and accessible than at any point in history. Are you prepared to seize this exciting new chapter?