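Will 2025 be the breakout year for AI Agents? An analysis of 5 popular AI Agent projects

According to the latest data from Cookie.fun, as of December 30 the total market cap of AI Agents had reached $11.68 billion, up about 39.1% over the previous 7 days, and by the time of writing it had climbed to $17.85 billion. This growth momentum shows how quickly AI Agents are rising in the crypto market...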

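At the start of 2025, AI-driven agents will gain greater autonomy to carry out more tasks, improving people's quality of life. Innovations in AI memory and reasoning will also help society find new ways to tackle major challenges such as climate change and healthcare. Over the past year, 2024, business leaders across sectors and AI...

Author: 郎瀚威, based in Palo Alto, Silicon Valley, USA. 2024 was a year of breakthrough progress in artificial intelligence (AI). As the technology kept evolving, AI not only reached further into verticals such as productivity tools, education, and entertainment, but also drove profound changes in the global business ecosystem and in user behavior. This report is based on Similarw...

With AI developing rapidly, vertical AI agents (Vertical AI Agent) are becoming a key force disrupting industries. These AI solutions, focused on specific industries or scenarios, not only give traditional SaaS (software as a service) a new lease of life but also offer founders and investors an excellent opportunity to build the next...

Across the ocean, YC partner and veteran investor Jared stated emphatically in his latest deep-dive episode that vertical AI Agents could become a new market 10 times the size of SaaS. By replacing manual work and improving efficiency, the field could produce tech giants with market caps above $300 billion. So, the heavyweights...

Introduction. In today's fast-moving technology environment, artificial intelligence (AI) has permeated daily life, especially in information retrieval and search. Perplexity.ai, an innovative AI search engine, has drawn wide attention with its distinctive user experience and strong information processing. Its latest growth strategy...

Basic concepts of RAG. RAG, short for "Retrieval-Augmented Generation", is a technique that has risen to prominence in AI in recent years. It combines information retrieval with text generation to improve a machine's overall ability to handle natural language. The main mechanism of RAG works by drawing from...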

Introduction to the AI Product Managers Club The AI Product Managers Club has been established to create a dedicated spa...

Adam Mosseri, Instagram's head, recently ignited a tech debate with a post detailing his top concerns for the platform: the surging influence of AI on Instagram. He voiced valid anxieties, a blend of introspection and alarm. Yet, his focus feels misplaced, perhaps even chasing the wrong problem altogether.

Mosseri's public statements reveal genuine apprehension about content safety and authenticity, especially with advanced generative AI. Deepfakes, rampant misinformation, the sheer difficulty of moderating an AI-generated content deluge blurring reality and fabrication – these are his battlegrounds. He calls for better detection tools, clear labeling, and robust policies. Admirable goals, certainly, for any platform navigating the complexities of social media AI. It's a familiar refrain. Acknowledging these challenges publicly is a crucial first step for Instagram's future.

Here's where the professional eyebrow raises. While combating bad actors and synthetic media is vital, are we merely patching symptoms? Mosseri's public gaze fixates on the *generative* aspects of AI – the deepfakes, the synthetic content. But what about the *algorithmic* AI, the silent architect dictating every user's experience, every single day? Instagram's algorithm, powered by advanced AI, is arguably the platform's most influential 'AI'. It decides what you see, when you see it, and even *if* you see it. This invisible hand shapes trends, influences purchasing decisions, and critically, impacts mental health and societal narratives. When we discuss AI concerns, shouldn't this ubiquitous, experience-defining AI take center stage? It's the real power broker.

These are the systemic questions, often overshadowed by the more sensational threats of deepfakes. While the latter are critical for safety, the former are fundamental to the platform's core experience and its long-term societal impact.

The sentiment "Adam Mosseri is just looking for the guy who did this" resonates. It suggests a hunt for a perpetrator, not a deep dive into systemic challenges. The issue isn't just a few rogue actors abusing AI tools; it's AI's pervasive integration into every facet of the platform. Meta, Instagram's parent company, pours billions into AI research and development. This isn't just an external threat; it's an internal revolution. So, when Mosseri raises concerns, one has to wonder: how much of this is about mitigating external risks, and how much is grappling with Meta's own ambitious AI journey? The platform's business model thrives on sophisticated AI to deliver targeted ads and drive engagement. Untangling the "good" AI from the "bad" AI becomes a Gordian knot when the very engine of the platform is AI-driven.

Instead of merely playing whack-a-mole with harmful AI content, perhaps Adam Mosseri and his team should broaden their focus to include:

The conversation around AI on Instagram must evolve beyond content moderation. It's about understanding the profound ways AI is reshaping connection, creativity, and community on one of the world's most influential platforms. Mosseri's concerns are a start, but for Instagram AI to truly thrive responsibly, he needs a wider lens, looking beyond immediate threats to the very fabric of the platform itself. What are your thoughts? Is Instagram's leadership seeing the full picture, or are they missing the algorithmic forest for the deepfake trees?

For years, we've yearned for a Siri that truly understands, moving beyond rigid commands to meaningful conversation. Our wish is finally coming true. Bloomberg's reliable Mark Gurman reports a monumental overhaul: Apple is transforming Siri into a generative AI chatbot, akin to OpenAI's ChatGPT. This isn't just an update; it's a strategic infusion of powerful AI across iPhone, iPad, and Mac, with a rumored launch later this year, likely alongside iOS 18 and macOS Sequoia.

Siri, while groundbreaking in its infancy, has undeniably fallen behind. Its current iteration often feels like a digital dinosaur, rigid, context-deficient, and frankly, a bit behind the times in an era dominated by advanced large language models like Google Gemini and Microsoft Copilot. So, what does this reported transformation mean for millions of Apple users and the broader tech landscape?

The core of this significant upgrade shifts Siri from script-based responses to leveraging cutting-edge generative AI. Imagine a Siri that doesn't just set a timer but comprehends complex, multi-part requests, instantly summarizes dense articles, drafts professional emails, or even helps brainstorm creative ideas, all while seamlessly maintaining context across interactions. This isn't merely about faster responses; it's about profound comprehension and genuinely natural engagement. Think of it as upgrading from a basic calculator to a sophisticated supercomputer.

Integrating a ChatGPT-style AI bot means Siri could potentially become your ultimate digital Swiss Army knife:

This deep integration into iOS and macOS signifies a unified, intelligent experience. The AI won't be an overlay; it will be an intrinsic, indispensable part of how you interact with your entire Apple ecosystem.

Apple's pivot to advanced generative AI for Siri is both long overdue and strategically brilliant. The AI arms race has dramatically intensified, with Google, Microsoft, and numerous startups aggressively pushing boundaries. Apple, renowned for its meticulous, often late-to-market but polished products, can no longer afford its flagship assistant to remain a comparative laggard.

This initiative isn't merely about playing catch-up; it's about reasserting Apple's unparalleled position at the forefront of user experience and innovation. By baking a powerful, on-device AI directly into its hardware and software, Apple aims to leverage its unique ecosystem advantage. This promises enhanced privacy and speed through on-device processing, a key differentiator from many cloud-dependent AI solutions. Imagine your personal AI, not just smart, but also fiercely protective of your data.

While the prospect of a profoundly smarter Siri excites millions, Apple faces monumental challenges. User expectations will be astronomical. The new Siri must be consistently accurate, supremely reliable, and unequivocally respectful of user privacy—principles Apple champions. Balancing powerful, intelligent AI with responsible, ethical implementation will be paramount.

Furthermore, how will Apple truly differentiate its AI chatbot from the already crowded field? The answer undoubtedly lies in its unparalleled, deep ecosystem integration and its unwavering commitment to privacy. Picture Siri not just answering questions but proactively managing your digital life across all your Apple devices—your iPhone, iPad, Mac, Apple Watch, and even HomePod—all while keeping your sensitive data secure, processed on your device whenever feasible. This isn't just an assistant; it's a personalized, privacy-centric digital guardian.

This reported transformation of Siri represents a pivotal moment for Apple. It signals a serious, aggressive commitment to generative AI and acknowledges the seismic shift in human-computer interaction. If Apple delivers on its promise of a truly intelligent, seamlessly integrated AI chatbot, it could redefine how hundreds of millions interact with their devices daily, finally granting us the smart assistant we've always envisioned. Get ready. The wait, it seems, is almost over.

Forget your Apple Watch; a new whisper from Cupertino suggests a paradigm shift. Apple is reportedly developing an AirTag-sized AI wearable – a tiny, unobtrusive pin, equipped with cameras and microphones, designed as an ambient AI companion. This isn't just another gadget; it signals a profound reimagining of conversational AI, wearable tech, and the very essence of ambient computing.

According to The Information, Apple's latest clandestine project centers on an AI-powered wearable pin. Picture it: an "AI pin" roughly the size of an AirTag, encased in a "thin, flat, circular" housing. Its core function? To intelligently perceive a user's surroundings via integrated cameras and microphones. This isn't passive recording; it's about real-time context, deep understanding, and proactive, intuitive assistance. While concrete details remain, as ever with Apple, shrouded in secrecy, the concept points to a device offering instant insights, environment-aware answers, and seamless interaction with your digital life – all without a screen. It's a bold leap, pushing interactions beyond the familiar tap and swipe.

The timing is crucial. Devices like the Humane AI Pin and the Rabbit R1 recently captured headlines, signaling the tech industry's collective pivot towards ambient AI. These intelligent systems aim to integrate seamlessly into daily life, minimizing screen dependence. Is Apple, often a strategic follower but ultimately a market definer, preparing its definitive statement? This isn't merely about competition; it's about leveraging Apple's unparalleled ecosystem. Imagine an AI wearable integrating effortlessly with Siri, Apple Health, HomeKit, and your entire suite of Apple devices. The potential for a truly personal, context-aware AI assistant, always on your person, is immense. This could be Apple's answer to evolving Siri beyond a device-bound voice assistant, transforming it into an omnipresent, far more powerful entity.

An AirTag-sized AI companion, while exhilarating, immediately sparks critical questions, especially concerning privacy. A device constantly analyzing its surroundings – even with on-device processing – will undoubtedly ignite intense public debate. How will Apple navigate data security and user consent when your wearable is perpetually listening and watching? This represents a monumental hurdle, though Apple's established privacy ethos might offer a crucial advantage in public trust. Then, practicality: what indispensable use cases will this device offer that an iPhone or Apple Watch cannot already cover or enhance? Is it for memory augmentation, rapid information retrieval, accessibility features, or an entirely new paradigm of digital interaction? The challenge lies in delivering undeniable value without introducing unnecessary complexity or redundancy. Furthermore, how will this wearable tech integrate into Apple's formidable product lineup? Will it serve as an iPhone companion, a radical alternative, or a bridge to future spatial computing experiences? It's easy to envision it as a natural extension, offloading ambient tasks from the iPhone, liberating users from screen-tethered interactions for quick, intuitive assistance.

This Apple AI wearable remains firmly in the realm of rumor, yet its emergence in the news paints a compelling portrait of Apple's long-term AI vision. It's not just about refining Siri on your phone; it's about embedding intelligence directly into your environment, your personal space. Whether this ambitious project materializes, and in what final form, remains to be seen. But one truth is clear: Apple is deeply invested in shaping the next generation of personal technology. An AirTag-sized AI pin could prove to be one of its most intriguing gambits yet. What are your thoughts? Is this the future you envision, or do the privacy implications loom too large?

ElevenLabs, renowned for its cutting-edge voice AI, just dropped a bombshell into the music industry: "The Eleven Album," a fully AI-generated music album. This isn't merely a tech demonstration. It's a calculated, strategic move. The goal? Showcase AI's potential to amplify human artistry, not replace it. Simultaneously, ElevenLabs aims to navigate the treacherous ethical landscape currently defining AI music. Is this a genuine creative leap or a sophisticated PR maneuver in a fiercely debated domain?

At its heart, "The Eleven Album" serves as ElevenLabs' definitive public demonstration. It’s a bold statement for their nascent music generator. The central tenet is unambiguous: AI is not a replacement. Instead, it’s a potent instrument designed to "expand human artists' creative range while maintaining full autonomy." This is a compelling, even revolutionary, proposition.

Imagine: a digital muse capable of generating intricate melodies, complex harmonies, and dynamic rhythms on demand. Artists can rapidly prototype countless ideas. They can explore uncharted genres. They can craft unique soundscapes that would otherwise demand prohibitive studio time and vast resources. "The Eleven Album" concretely illustrates this potential, showcasing the technology's tangible capabilities.

The concept of AI augmenting human creativity isn't novel; it's already transforming visual arts, literature, and game development. ElevenLabs extends this paradigm to music, positioning AI as an indispensable co-pilot. A tireless muse. An infinitely patient session musician. Consider a songwriter grappling with a difficult bridge section; the AI could instantly generate multiple melodic variations in a specified key and mood. Picture a film composer under immense pressure, needing an evocative atmospheric track within hours, not days.

The underlying promise is transformative: democratizing music creation, dismantling creative blocks, and empowering artists to experiment unbound by conventional constraints like time, budget, or instrumental mastery. This could unlock unprecedented forms of musical expression, reshaping the industry's very foundations.

Yet, any discussion of AI music inevitably confronts its colossal ethical challenges. The industry is currently embroiled in heated disputes: copyright infringement, equitable compensation for artists whose intellectual property trains these AI models, and the fundamental redefinition of authorship itself. From convincing "deepfake" vocals to algorithms meticulously replicating existing artists' styles, the legal and moral terrain is profoundly murky.

ElevenLabs' insistence on artists retaining "full autonomy" directly addresses these anxieties. They meticulously position their technology as augmentative, not substitutive. But a critical question persists: Where exactly is that line drawn? If an AI composes a substantial segment of a track, who holds the copyright? How can we guarantee that original human creators, whose work implicitly informs AI training, receive proper attribution and fair remuneration?

These are not abstract philosophical dilemmas. They are existential questions for the enduring viability of human artistry in the AI era. "The Eleven Album's" role as an ethical touchstone hinges entirely on the transparency and robust protective mechanisms ElevenLabs implements to safeguard artists and uphold equitable practices.

ElevenLabs operates within a fiercely competitive ecosystem. Giants like Google, with its groundbreaking Lyria model, alongside Stability AI and a constellation of innovative startups, all aggressively pursue market share in the burgeoning AI music sector. This unprecedented surge in innovation heralds a seismic shift in how music will be conceived, produced, consumed, and ultimately, defined.

"The Eleven Album" represents a profoundly bold declaration. It's an explicit invitation to engage: to listen critically, to dissect its implications, and to envision future possibilities. It compels us to confront both the uncomfortable realities and the exhilarating prospects AI injects into humanity's most cherished art form.

Ultimately, "The Eleven Album" transcends being merely a collection of tracks. It stands as a pivotal marker in music technology's relentless evolution. While legitimate ethical concerns demand robust, proactive solutions, ElevenLabs consciously steers the discourse toward collaboration and empowerment, away from outright replacement. This is undeniably a precarious tightrope walk.

Music's future is undeniably hybrid. Human creativity will be amplified and accelerated by intelligent algorithms. The paramount challenge, and indeed the immense opportunity, lies in developing these tools with profound responsibility. We must ensure the very soul of music—its intrinsic human connection, its raw emotional depth—remains unequivocally paramount. As tech professionals and ardent music enthusiasts, our collective vigilance is crucial. The symphony of tomorrow is being composed right now, one AI-assisted note at a time.

The future of artificial intelligence isn't just unfolding in labs; it's being aggressively shaped in the political arena. Silicon Valley, far from waiting, is already pouring tens of millions into the 2026 midterm elections. This isn't mere lobbying; it's a strategic, proactive campaign to dictate the coming wave of AI regulation. Why such urgency? The stakes are astronomical. This unprecedented early investment will profoundly impact the legislative landscape, future AI policy, and the very fabric of our democratic process.

Why is AI regulation igniting such fierce debate and attracting massive early funding? The battle lines are stark. On one side, a chorus of voices demands robust oversight. Concerns range from algorithmic bias in hiring and lending, widespread job displacement, and egregious data privacy breaches, to the chilling specter of deepfakes and even existential risks posed by unchecked autonomous systems. Regulators and ethicists grapple with a singular goal: ensuring AI develops safely, ethically, and equitably for all.

Conversely, the tech industry champions unbridled innovation. They argue that overly stringent regulations could cripple progress, forcing cutting-edge research offshore and eroding America's global competitive edge. Their vision? A lighter touch, an ecosystem where AI can flourish, unlocking breakthroughs in medicine, climate modeling, and economic growth. This fundamental tension fuels the financial deluge. Silicon Valley isn't merely advocating; it's actively investing to elect candidates who share its vision for a less restrictive AI future. This isn't about dodging minor fines. It’s about forging the foundational governance of AI for generations.

The chosen engine for this influence campaign? Super PACs. These independent political action committees are financial behemoths, capable of raising and spending unlimited sums to support or oppose candidates. Crucially, they operate outside direct campaign control, yet their impact can be seismic. Think of them as political venture capitalists, investing early and heavily to secure a preferred outcome.

The tech elite's decision to funnel significant funds into these entities two years ahead of the 2026 elections speaks volumes. It’s a long-term play, a deep commitment to pre-emptively shaping a legislative landscape favorable to their interests when AI inevitably faces congressional scrutiny. This isn't just traditional lobbying—a direct plea to existing lawmakers. This is an "upstream investment," akin to diverting a river's course at its source. By influencing who gets elected, these tech titans ensure a friendly ear, or better yet, a like-minded voice, will already reside in legislative chambers nationwide.

This early financial deluge guarantees tangible shifts. Candidates vying for seats in the 2026 midterms will face intense pressure to articulate clear stances on AI. Gone are the days of AI being a niche, tech-insider topic. It's now a mainstream policy debate, capable of swaying voter sentiment and unlocking substantial campaign war chests. Expect detailed position papers, town halls dedicated to AI ethics, and even primary debates centered on technological governance.

A clear ideological chasm is emerging. Will candidates align with the "innovation-first" gospel, prioritizing speed and development? Or will they champion the "regulation-first" mandate, emphasizing safety and oversight? This proactive funding ensures AI policy won't be relegated to an afterthought. It will become a core pillar of numerous campaigns, defining candidates and potentially entire party platforms. The battle lines for AI regulation are being drawn now, long before most voters even glance at their calendars. This underscores the tech industry's formidable political sophistication. They understand influence. They wield it effectively.

This isn't an anomaly. This is a potent chapter in the ongoing saga of the tech industry flexing its formidable political muscles. From intense antitrust battles to complex data privacy legislation, Silicon Valley has steadily amplified its voice in Washington D.C. and state capitals nationwide. The scale of investment targeting the 2026 midterms, specifically for AI regulation, reveals the technology's perceived pivotal role—economically, socially, and politically.

We are witnessing a paradigm shift. Tech giants are no longer content with merely being market leaders. They are now architects of public policy, actively steering the nation's direction. This pre-emptive electoral spending mirrors historical patterns of other powerful industries (oil, pharmaceuticals, finance) seeking to embed their interests deep within the legislative process. As 2026 approaches, scrutinizing these financial flows and the candidates they empower becomes paramount. The future of AI, a major part of our economy and society, hangs in the balance, potentially determined by these politically charged contests. Does this aggressive spending constitute necessary advocacy, or does it represent undue influence on our democratic process? Share your perspective below.

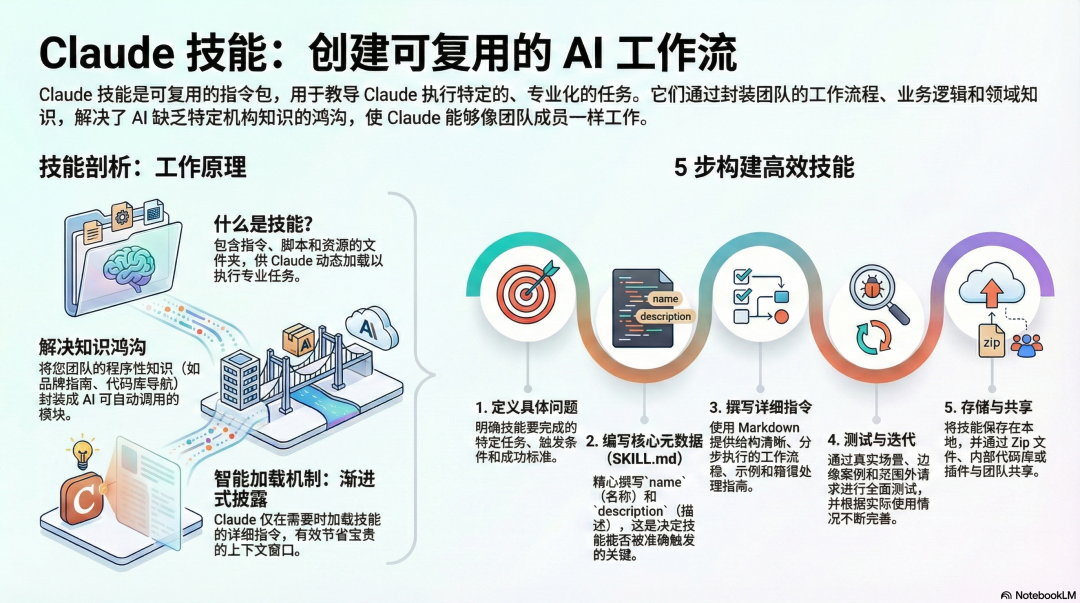

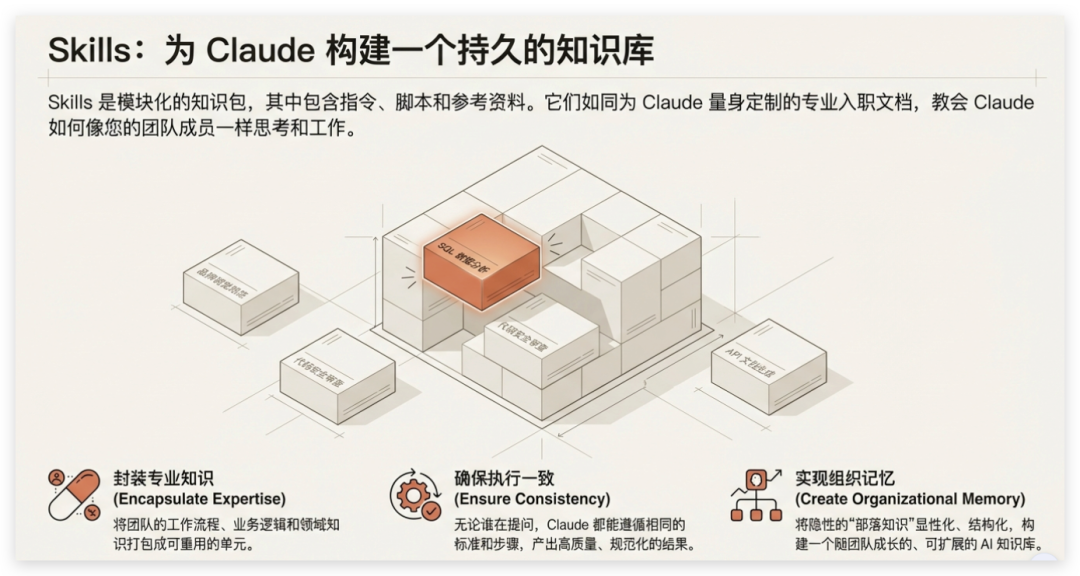

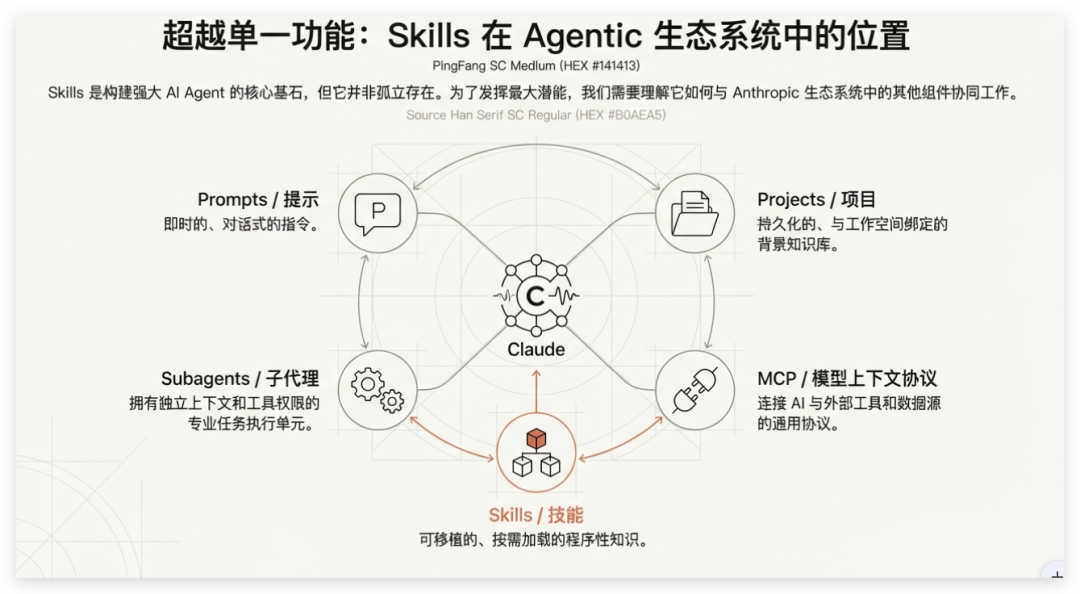

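As I understand it, Claude Skills are a skills toolkit for large models: reusable instruction packages that encapsulate a team's workflows, business logic, and domain knowledge, bridging the gap left by AI's lack of specialized knowledge.

A Skill bundles specific procedural knowledge, business logic, and execution scripts so that an Agent can carry them out fairly reliably, much like a workflow.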

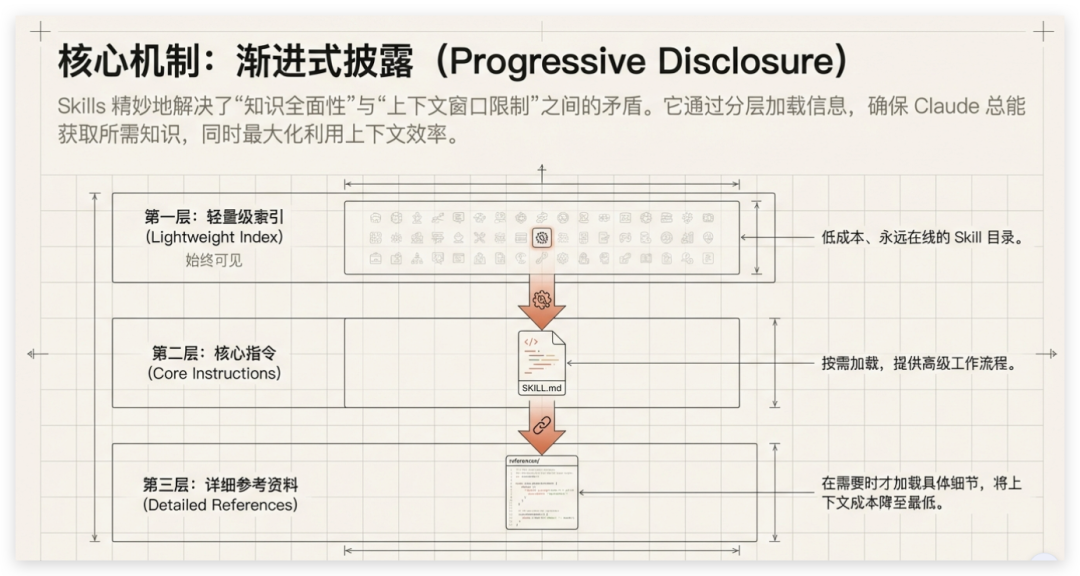

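From a usage standpoint, a Skill is a folder containing instructions, scripts, and resources that the LLM can load dynamically. Under the hood it relies on progressive disclosure: a skill's detailed instructions are loaded only when they are needed, which saves precious context window space.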

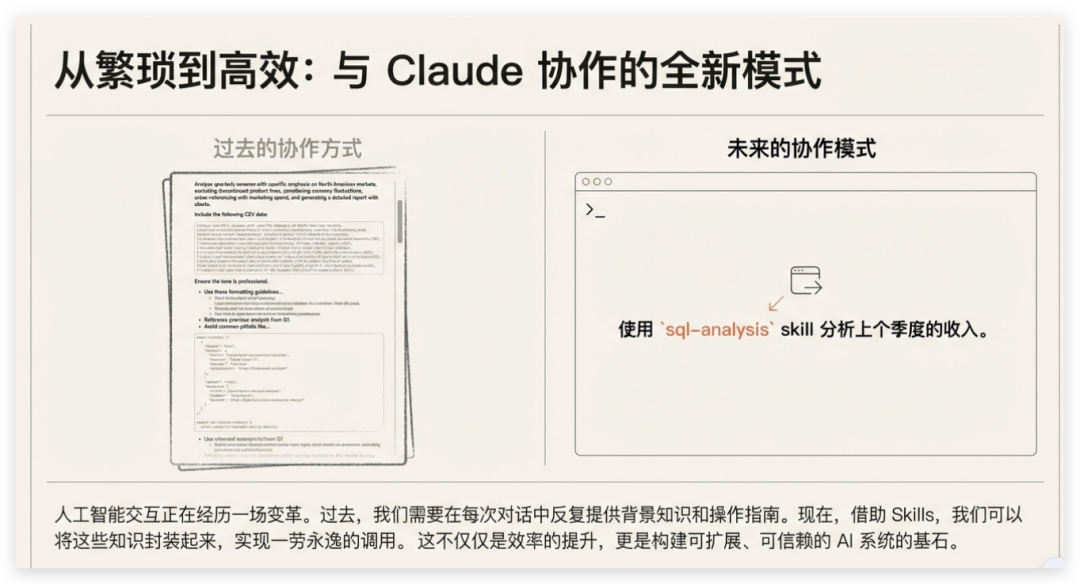

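Skills bring a new way of collaborating with AI Agents. In the past, we had to supply background knowledge and operating guidelines in every conversation. Now, with Skills, we can package that knowledge once and hand it to the AI.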

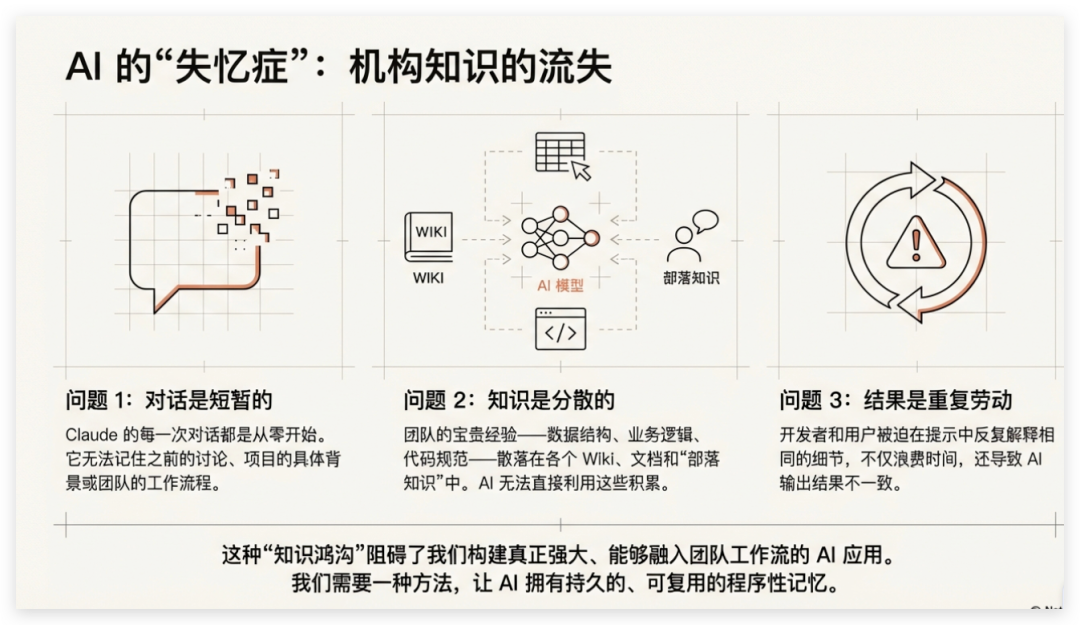

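To understand Skills well, you first need a clear view of some problems AI faces today.

1. Conversations are short-lived: every new chat starts from zero and usually cannot remember earlier discussions, the project's specific background, or the team's workflows.

2. Knowledge is scattered: team experience (data structures, business logic, and so on) is spread across wikis and documents, where the AI cannot call on it directly.

3. Lots of repeated work: developers and users have to explain details in prompts again and again, which takes time and makes AI output inconsistent.

It is like a new hire who needs all kinds of knowledge and skills to get up to speed on a project quickly. Skills are modular knowledge packs, tailor-made for AI.

The core mechanism of Skills is progressive disclosure: information is loaded in layers, so the AI can always get the knowledge it needs while using the context window as efficiently as possible.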

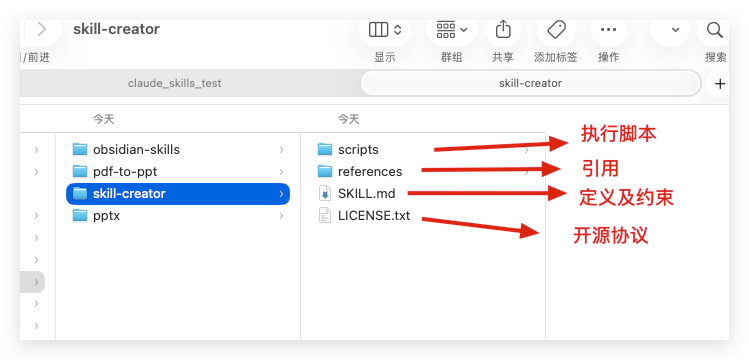

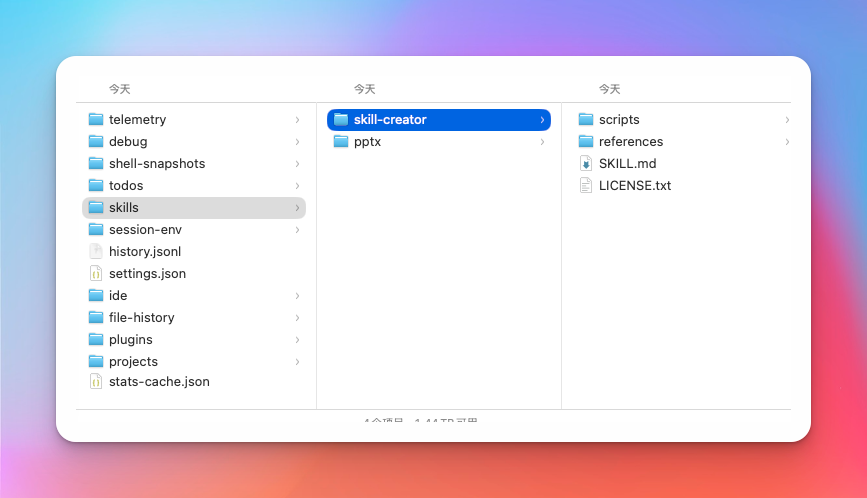
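The layered loading described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how Claude implements it: `LazySkill`, `context_for`, and the sample skill names and texts are all hypothetical. Every skill contributes a one-line summary, and the full instructions are fetched only for skills that are actually in use.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class LazySkill:
    """Hypothetical model of a skill with two levels of detail."""
    name: str
    description: str            # level 1: short, always in context
    loader: Callable[[], str]   # level 2: fetches the full instructions
    _body: Optional[str] = None

    def summary(self) -> str:
        return f"{self.name}: {self.description}"

    def instructions(self) -> str:
        # Load the detailed text on first use and cache it afterwards.
        if self._body is None:
            self._body = self.loader()
        return self._body


def context_for(skills: Iterable[LazySkill], active: set[str]) -> str:
    """Summaries for every skill, full instructions only for active ones."""
    skills = list(skills)
    parts = [s.summary() for s in skills]
    parts += [s.instructions() for s in skills if s.name in active]
    return "\n".join(parts)


# Made-up sample data: long bodies stand in for detailed instructions.
skills = [
    LazySkill("pptx", "Create slide decks.", lambda: "PPTX RULES " * 200),
    LazySkill("pdf", "Read and fill PDF files.", lambda: "PDF RULES " * 200),
]

idle = context_for(skills, active=set())
busy = context_for(skills, active={"pptx"})
print(len(idle), len(busy))  # the idle context is far smaller
```

In this toy version the unused `pdf` skill never has its loader called, which is the saving that progressive disclosure is after.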

Let's break it down at the folder level.

A skill folder usually contains these main parts:

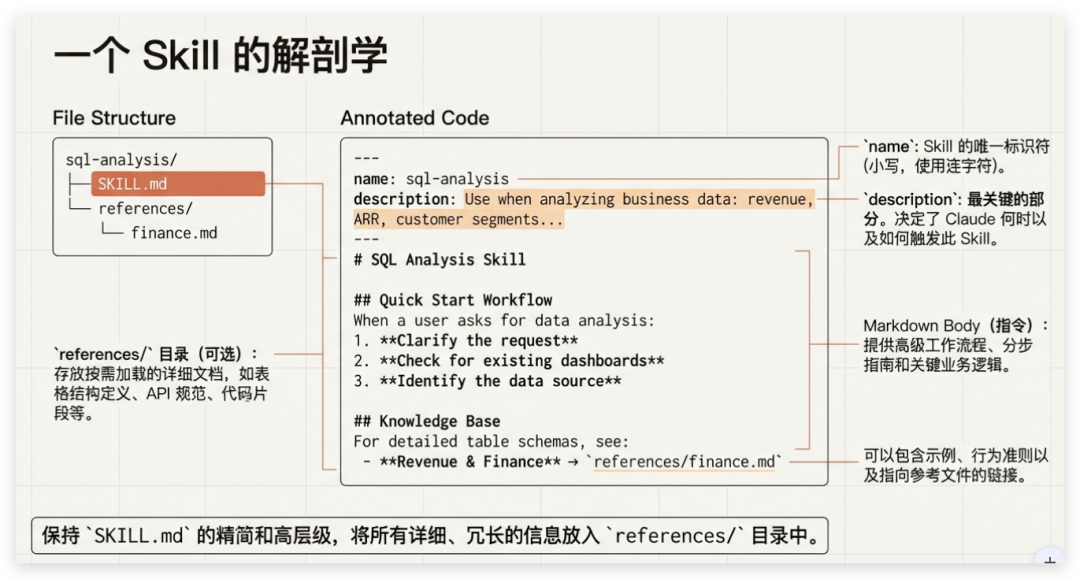

The inside of SKILL.md breaks down as follows:

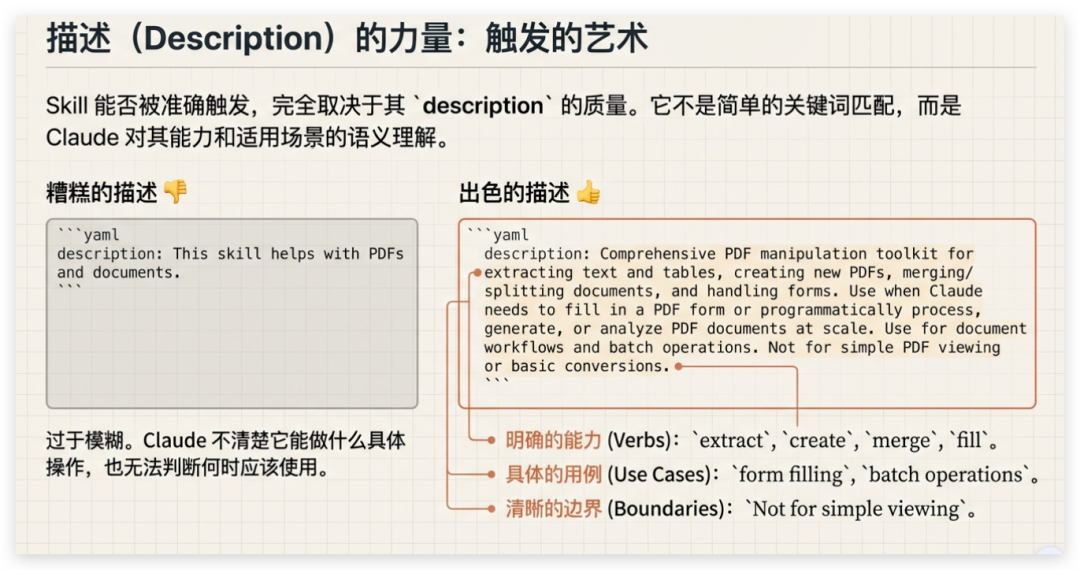

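Whether a Skill is triggered accurately depends entirely on the quality of the description in its SKILL.md. This is not simple keyword matching. It relies on Claude's semantic understanding of the skill's capabilities and the scenarios it applies to.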
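Since triggering hinges on the description, it can help to check that field before shipping a skill. The sketch below assumes SKILL.md begins with a frontmatter block delimited by `---` lines that holds `name` and `description` fields. The parser is deliberately simplified (single-line `key: value` pairs only), and `check_description` with its word threshold is a hypothetical heuristic, not an official rule. The sample file content is made up.

```python
def parse_frontmatter(text: str) -> dict[str, str]:
    """Read simple `key: value` pairs between the leading `---` lines."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    meta: dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":
            return meta
        key, sep, value = line.partition(":")
        if sep and key.strip():
            meta[key.strip()] = value.strip()
    return {}  # no closing `---`: treat as missing frontmatter


def check_description(meta: dict[str, str], min_words: int = 8) -> list[str]:
    """Hypothetical lint: flag a missing name or a too-terse description."""
    problems = []
    if not meta.get("name"):
        problems.append("missing name")
    words = meta.get("description", "").split()
    if len(words) < min_words:
        problems.append(f"description has {len(words)} words, want >= {min_words}")
    return problems


# Made-up example file content.
SAMPLE = """---
name: pdf-to-ppt
description: Convert a PDF into a PowerPoint deck. Use when the user asks to turn a PDF into slides.
---
# Instructions
1. Extract the text from the PDF.
"""

meta = parse_frontmatter(SAMPLE)
print(meta["name"], check_description(meta))
print(check_description({"name": "pdf-to-ppt", "description": "pdf tool"}))
```

A description that states both what the skill does and when to use it gives the model more to reason about than a bare label such as "pdf tool", which is why the sketch flags very short descriptions.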

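Skills are a core building block for powerful AI Agents, but they do not exist in isolation. To get the most out of them, we need to understand how they work together with the other components of the Anthropic ecosystem.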

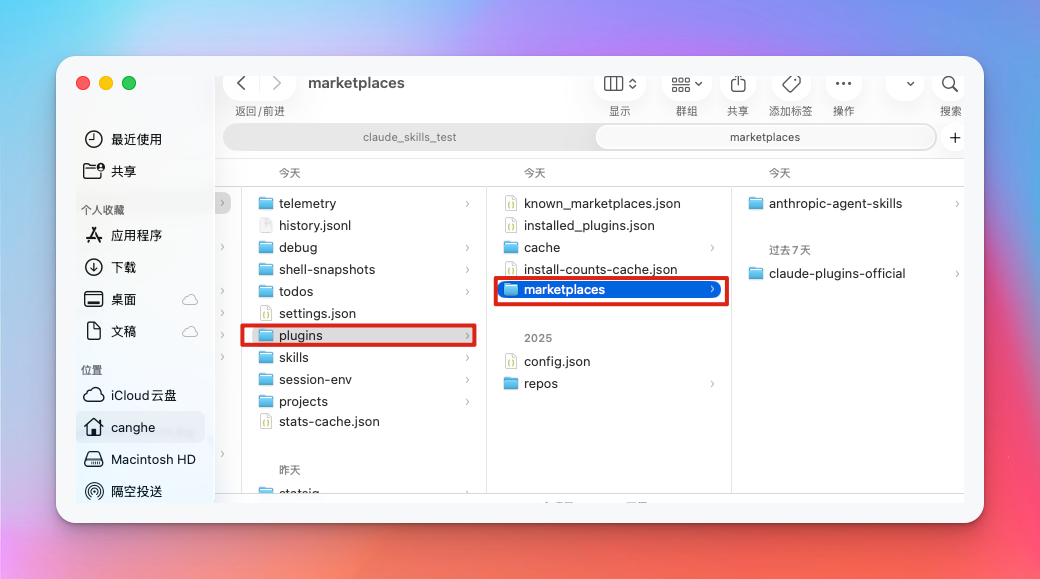

Skills installed through plugins can be found under the .claude/plugins/marketplaces/ directory:

Alternatively, you can install the plugin directly with a command:

/plugin install document-skills@anthropic-agent-skills

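After installing skills, you need to restart Claude Code.

To use one, you can name a skill explicitly, or let the Agent pick a suitable skill automatically based on the user's intent.

For example, enter this prompt: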

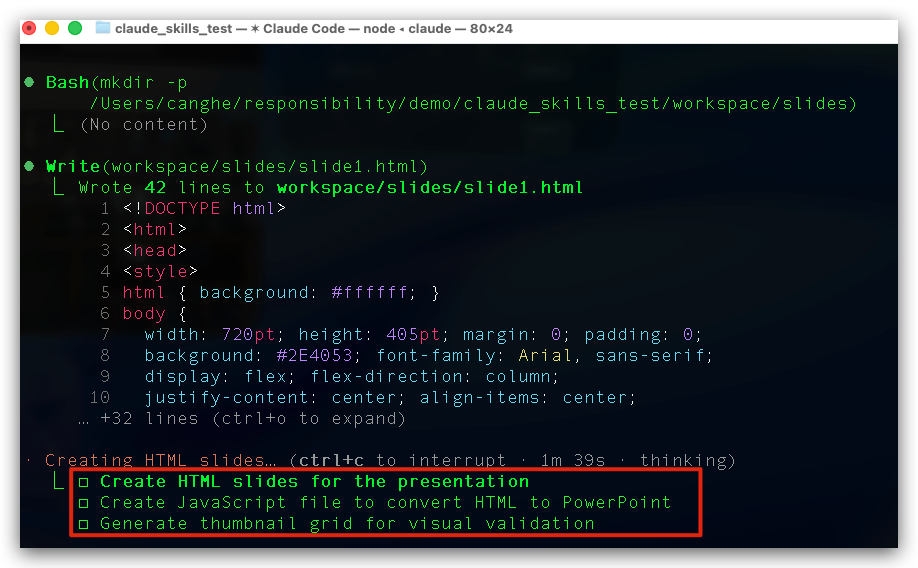

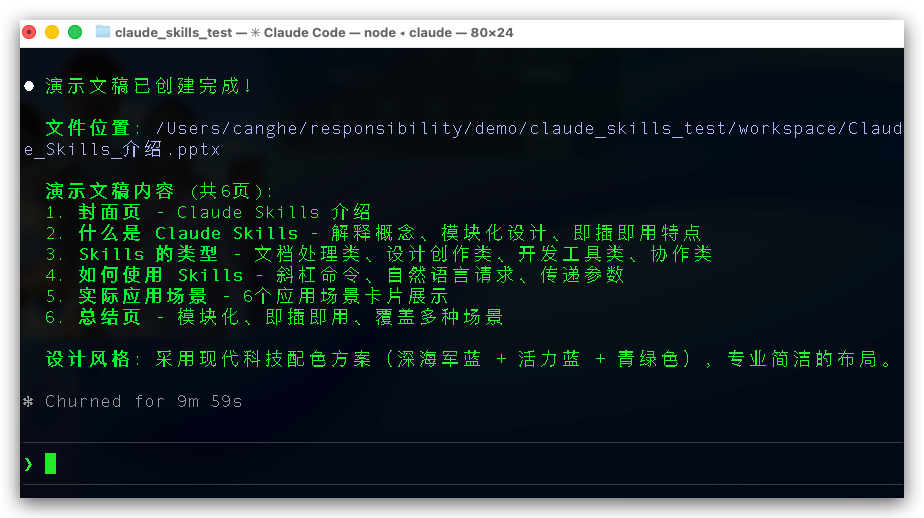

Use the pptx skill to create a presentation about Claude Skills

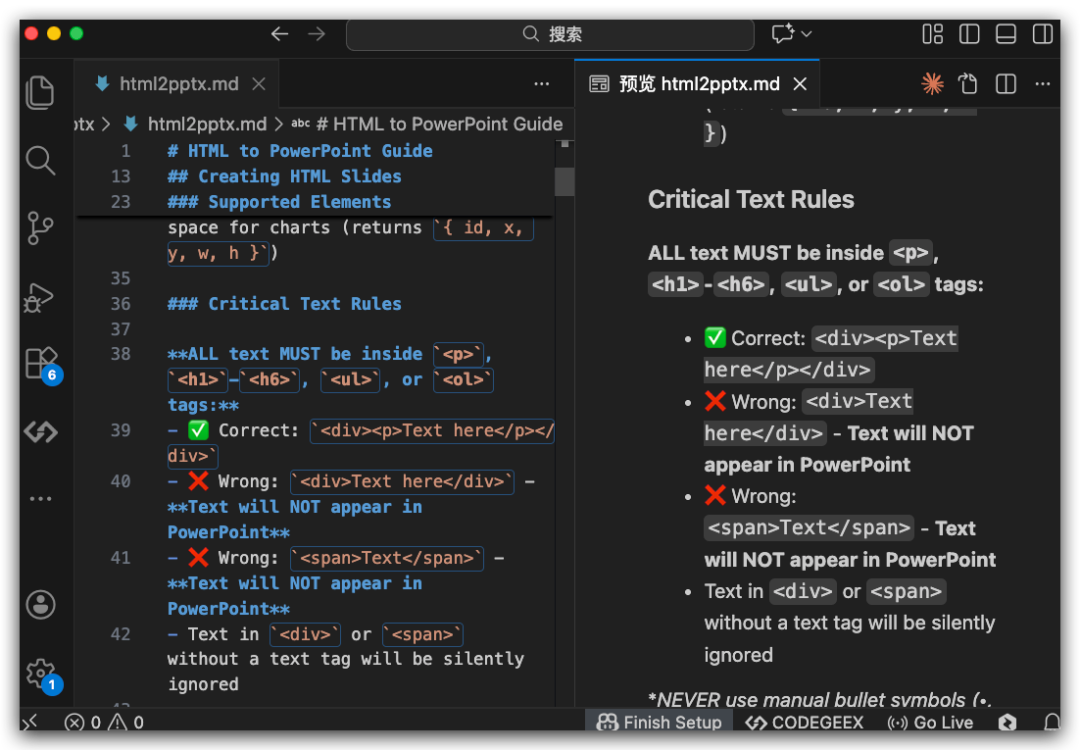

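You can see that it first writes the slides in HTML, then follows the rules in html2pptx.md from the pptx skill to convert the HTML into a PPT.

The constraint file html2pptx.md lays out, in markdown, the rules and conditions for converting HTML to PPT.

After quite a struggle, Claude Skills finally finished.

Let's look at the result:

Wow, that's really good. And just like that, a PPT-making skill is installed.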

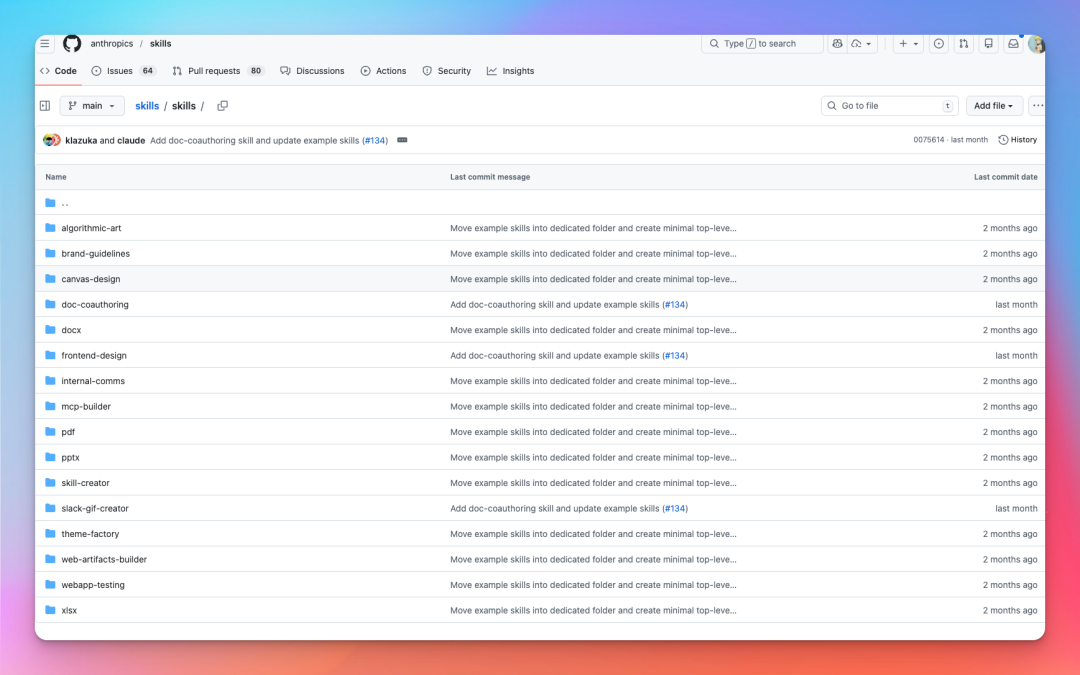

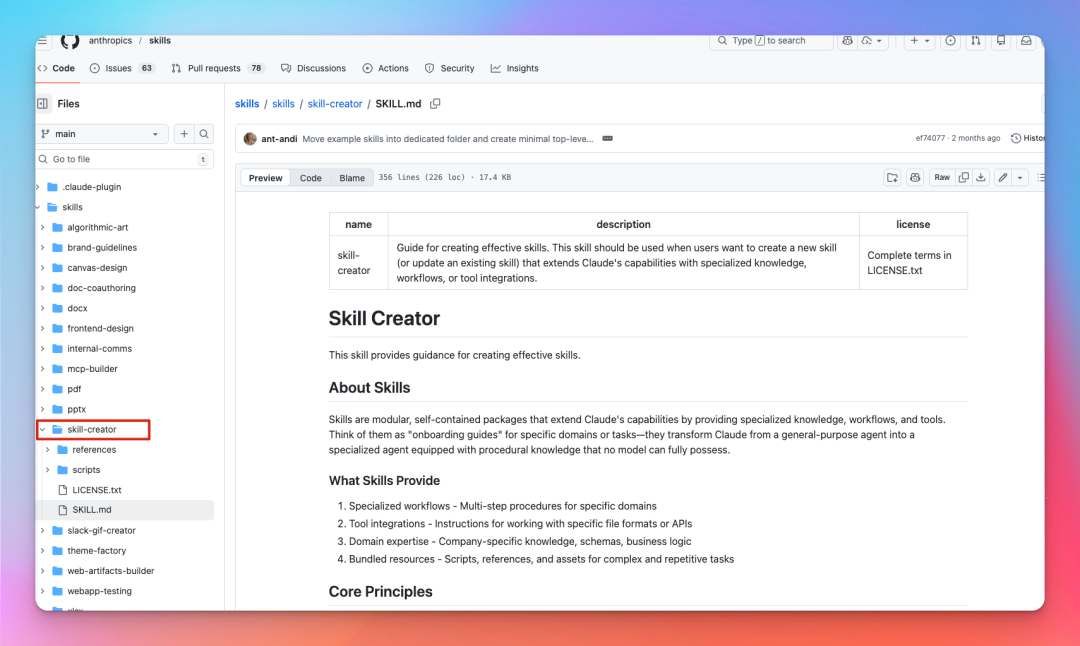

Anthropic's official skill repository offers quite a few useful skills. The open-source address is:

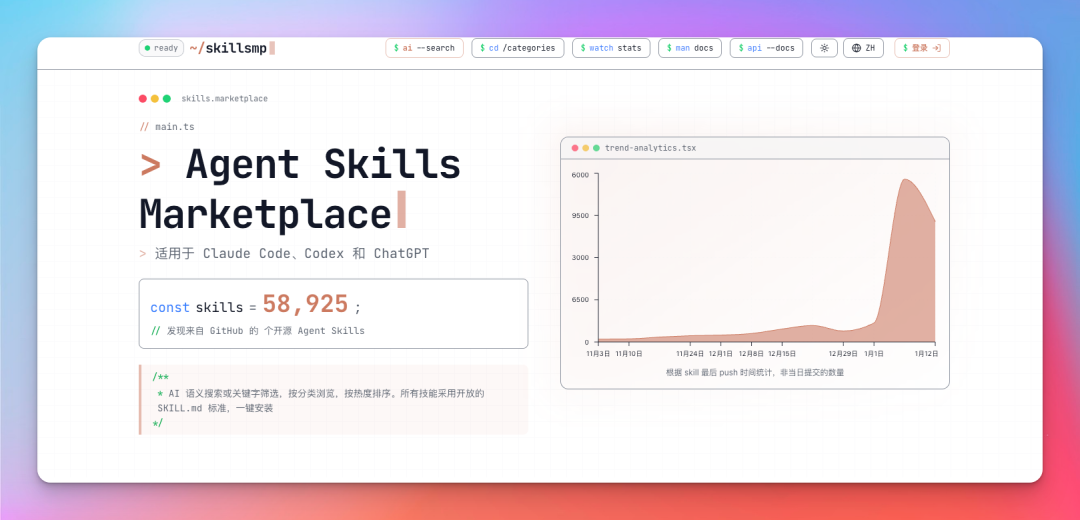

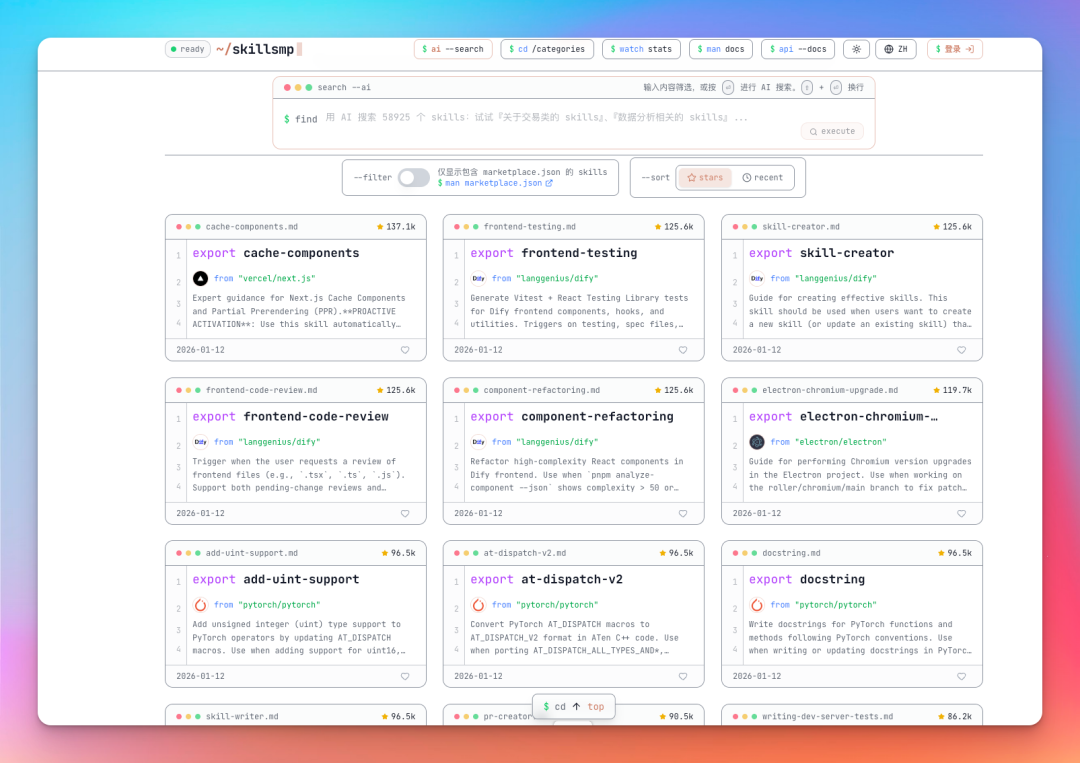

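You can also find a great many skills on the Skills marketplace: https://skillsmp.com/zh

There are already 58,925 Skills there:

You can use AI search there to find the Skills you want, or browse by category.

So which skills are must-installs? Here are a few recommendations: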

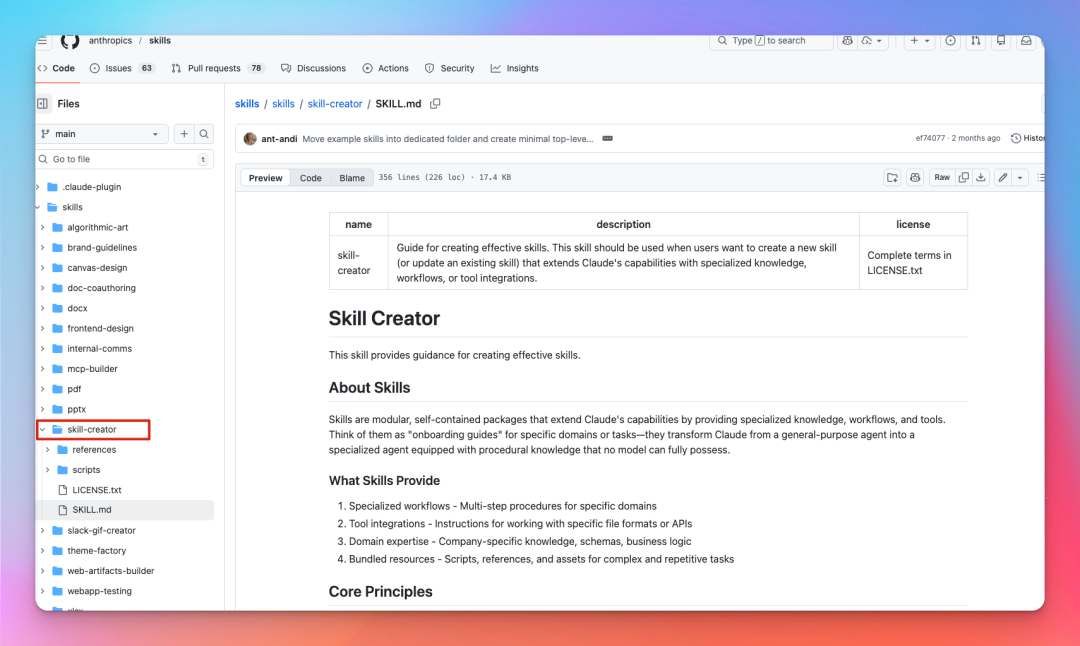

Made by Anthropic: a skill that writes skills automatically.

Address: https://github.com/anthropics/skills/tree/main/skills/skill-creator

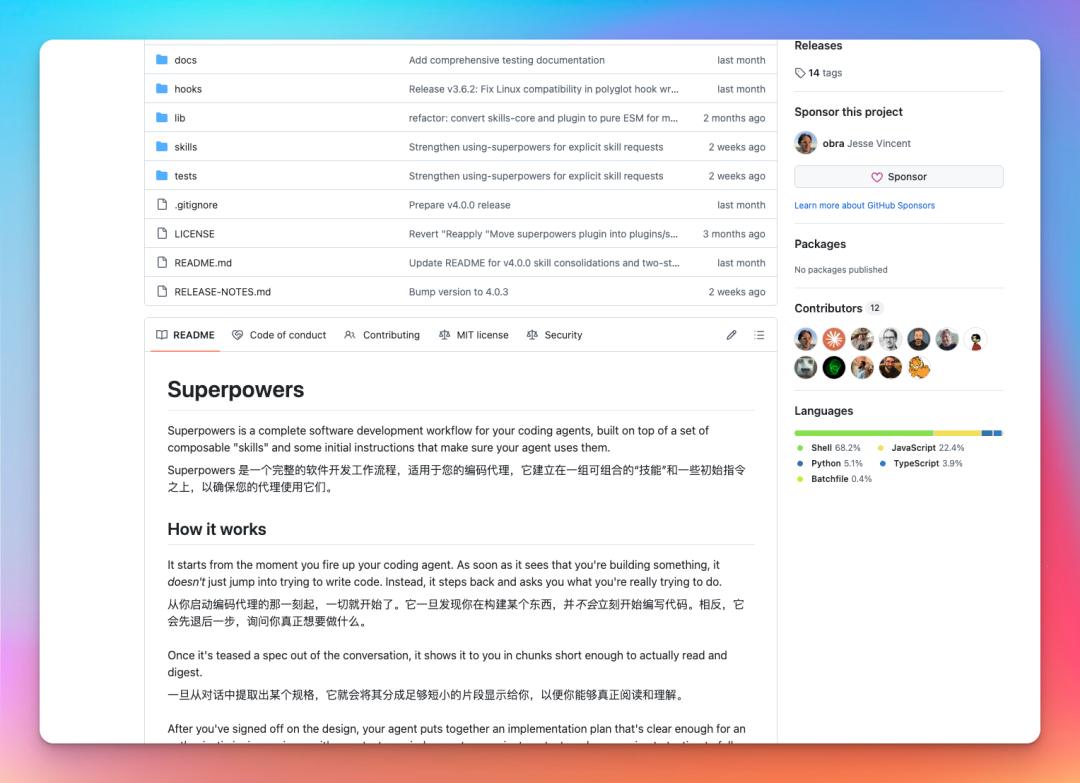

This is a skill for a complete software development workflow, covering requirements documents, development, testing, and more.

Address: https://github.com/obra/superpowers

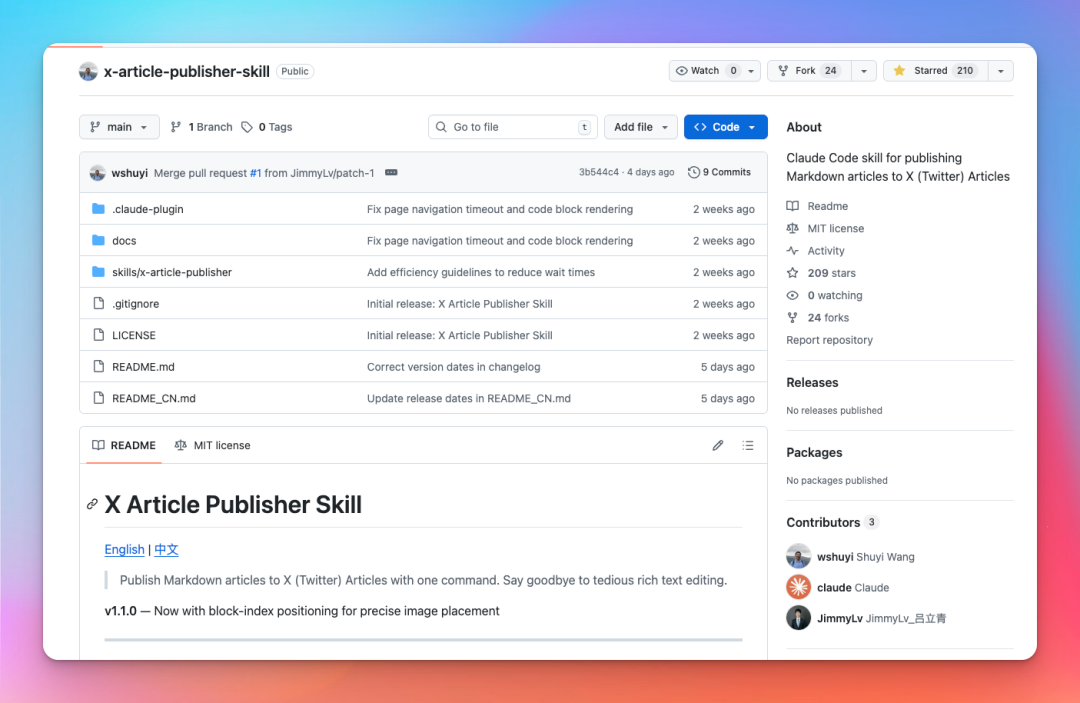

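This one makes writing X articles very convenient. I had felt this pain point for a long time and did not expect a tool for it to appear so soon.

Address: https://github.com/wshuyi/x-article-publisher-skill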

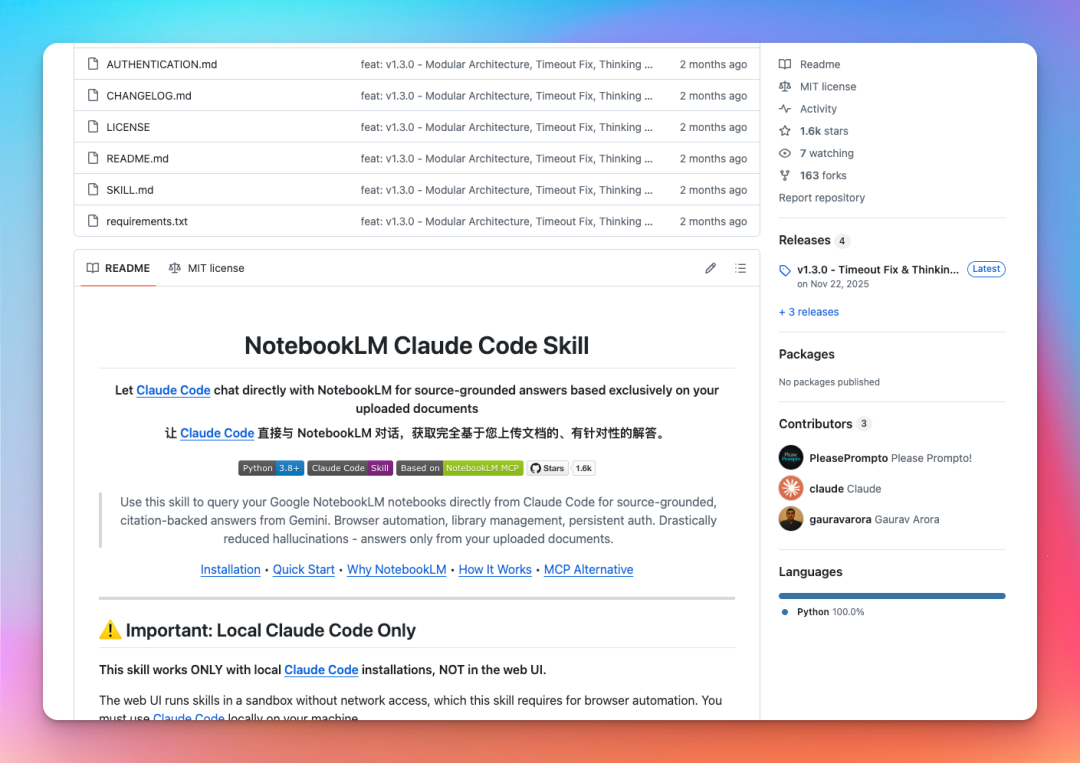

It lets you chat with NotebookLM directly inside Claude Code and upload PDFs straight to NotebookLM.

Address: https://github.com/PleasePrompto/notebooklm-skill

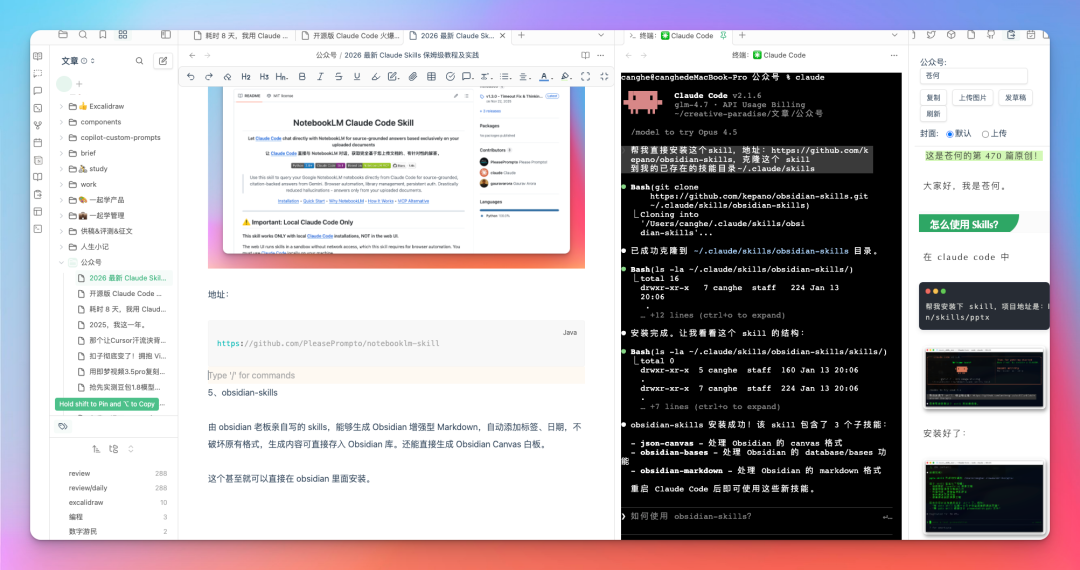

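These skills were written by the head of Obsidian himself. They can generate Obsidian-flavored Markdown, automatically add tags and dates without breaking existing formatting, and produce output that can be saved straight into an Obsidian vault. They can also generate Obsidian Canvas boards directly.

This one can even be installed directly inside Obsidian.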

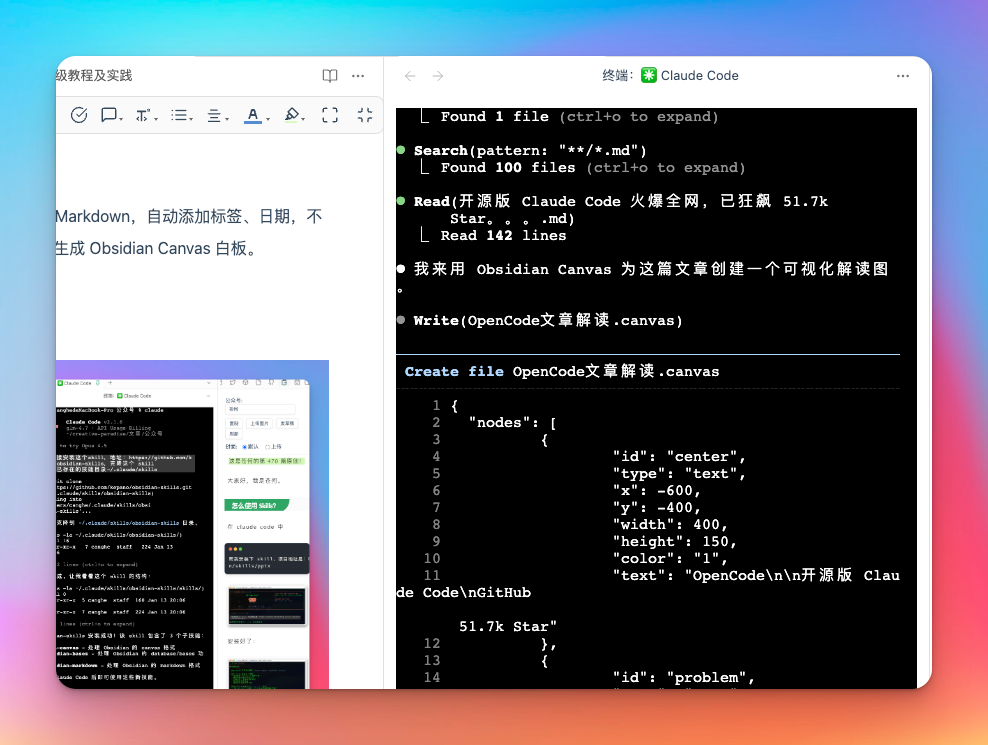

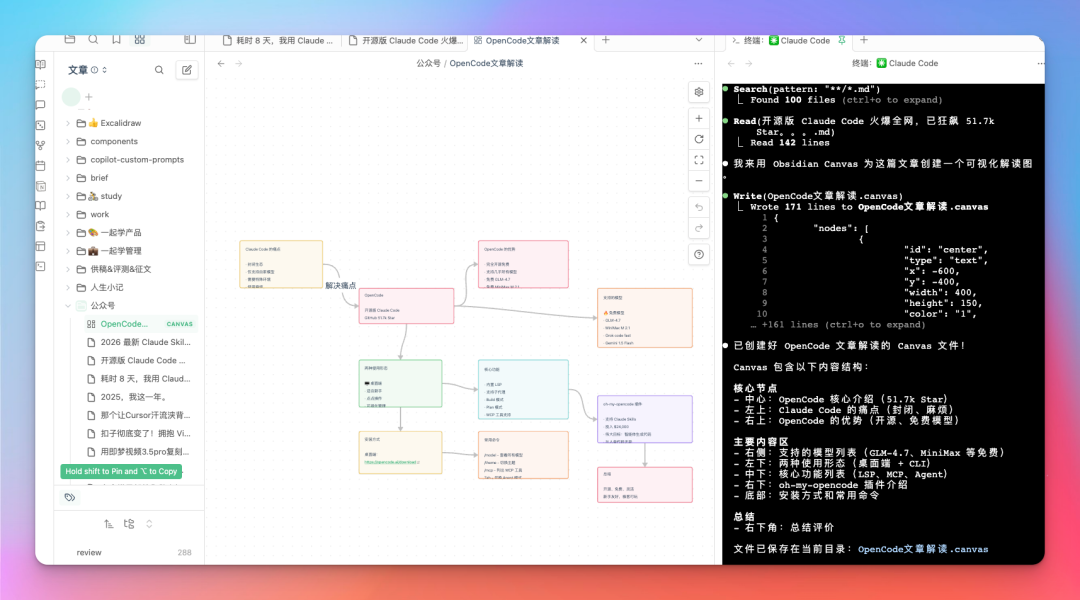

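Then enter the prompt: Use obsidian-skills to draw a canvas that interprets this article: "The open-source version of Claude Code takes the internet by storm, already soaring to 51.7k Stars..."

As you can see, the diagram came out quickly: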

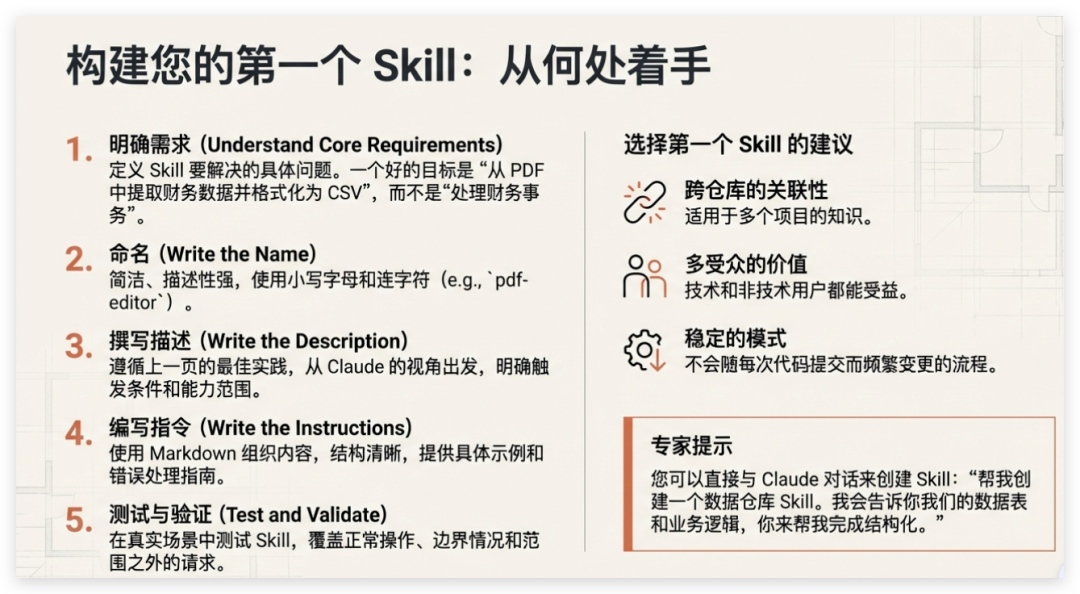

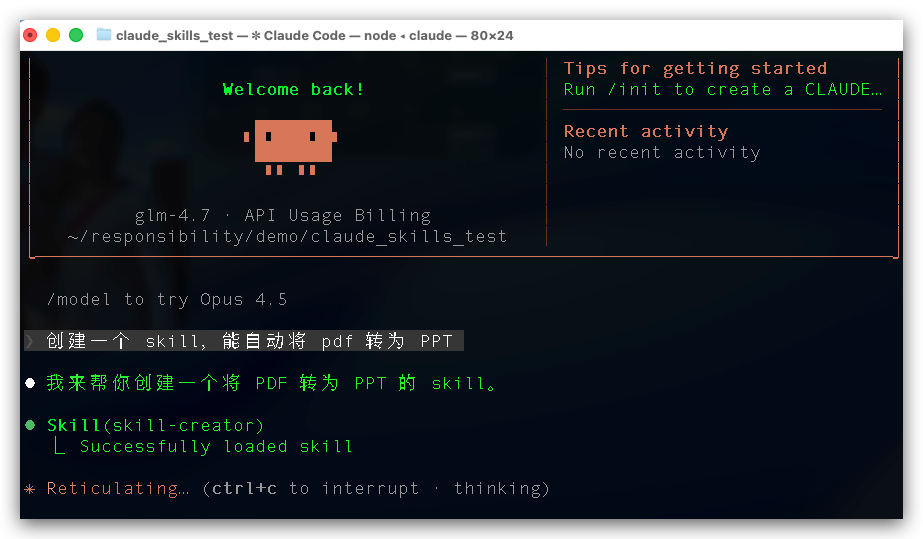

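I strongly recommend installing one of Anthropic's official skills first: skill-creator, a skill built specifically for creating skills.

Install this skill the same way first:

Install this skill for me directly from https://github.com/anthropics/skills/blob/main/skills/skill-creator and clone it into my existing skills directory ~/.claude/skills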

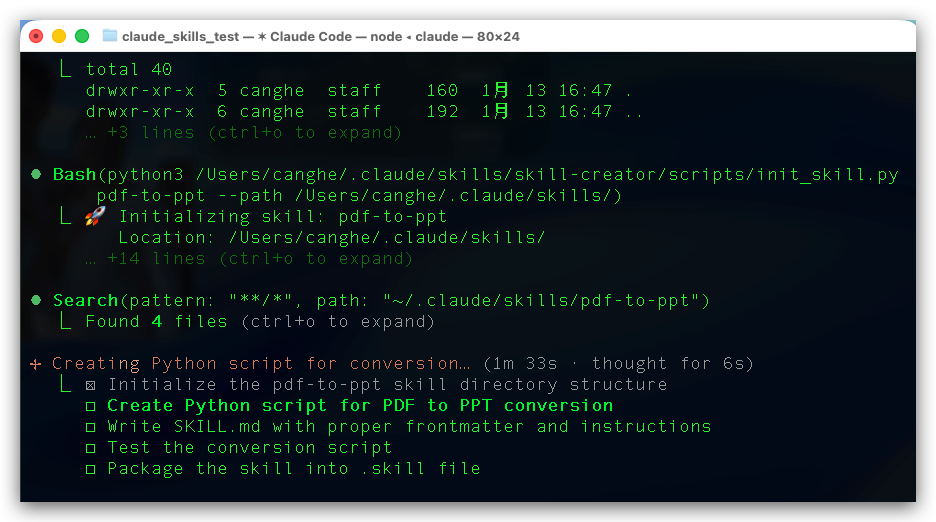

Now you can quickly create skills inside Claude Code (CC):

Create a skill that automatically converts PDF to PPT

It sets off on a flurry of steps to create the skill:

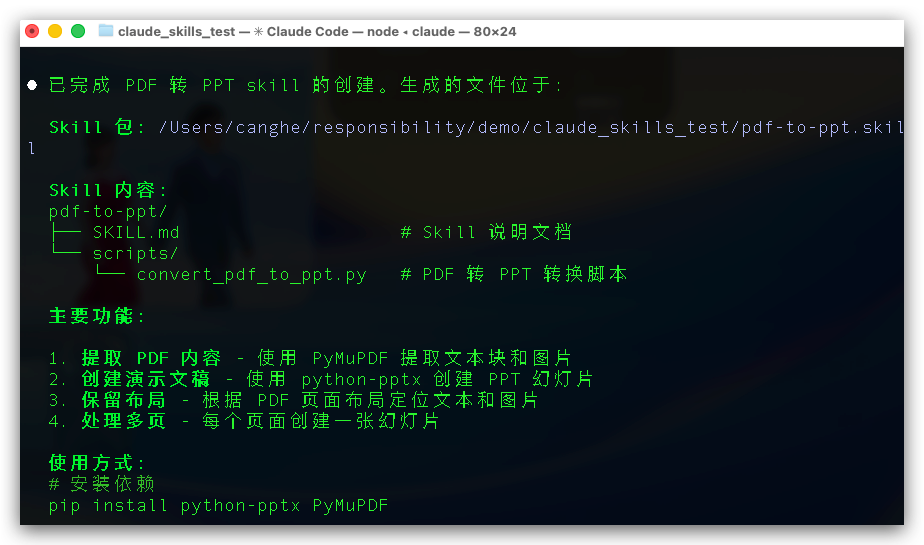

After that you do not need to do anything. The skill is generated quickly:

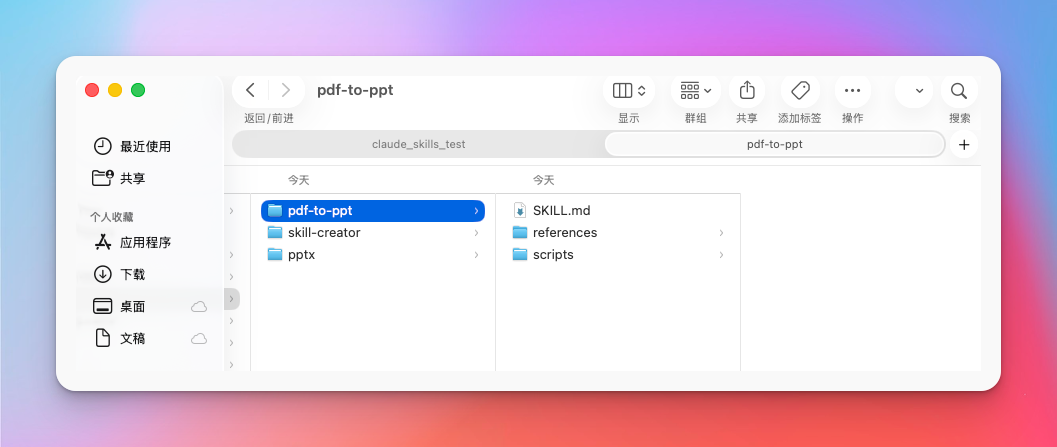

The corresponding folder has been generated as well.

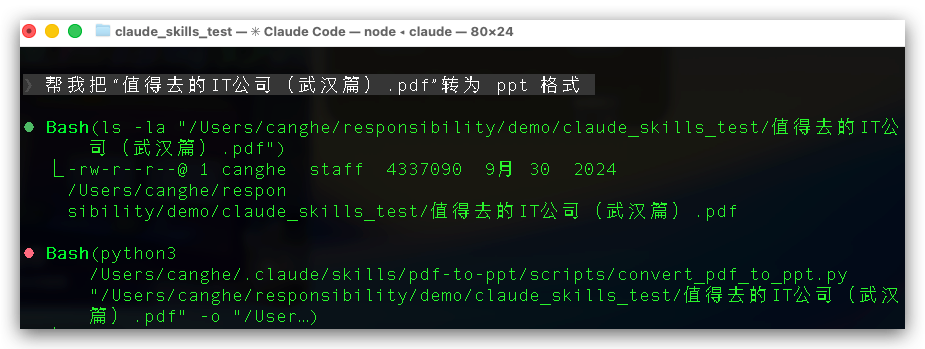
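A quick way to confirm that a skill folder landed where expected is to list the skill folders in a directory. The sketch below treats any subfolder that contains a SKILL.md file as a skill. `find_skills` is a hypothetical helper, and the demo builds a temporary directory rather than touching a real skills directory.

```python
from pathlib import Path
import tempfile


def find_skills(root: Path) -> list[str]:
    """Return the names of subfolders of `root` that contain a SKILL.md file."""
    if not root.is_dir():
        return []
    return sorted(p.name for p in root.iterdir() if (p / "SKILL.md").is_file())


# Demo on a throwaway directory with one skill folder and one plain folder.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "pdf-to-ppt").mkdir()
    (root / "pdf-to-ppt" / "SKILL.md").write_text(
        "---\nname: pdf-to-ppt\n---\n", encoding="utf-8"
    )
    (root / "scratch").mkdir()  # no SKILL.md, so it is not counted
    found = find_skills(root)

print(found)  # ['pdf-to-ppt']
```

To inspect the directory used earlier in this article, the same helper could be called as `find_skills(Path.home() / ".claude" / "skills")`.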

To verify, enter the following prompt:

Convert "值得去的IT公司(武汉篇).pdf" to ppt format for me

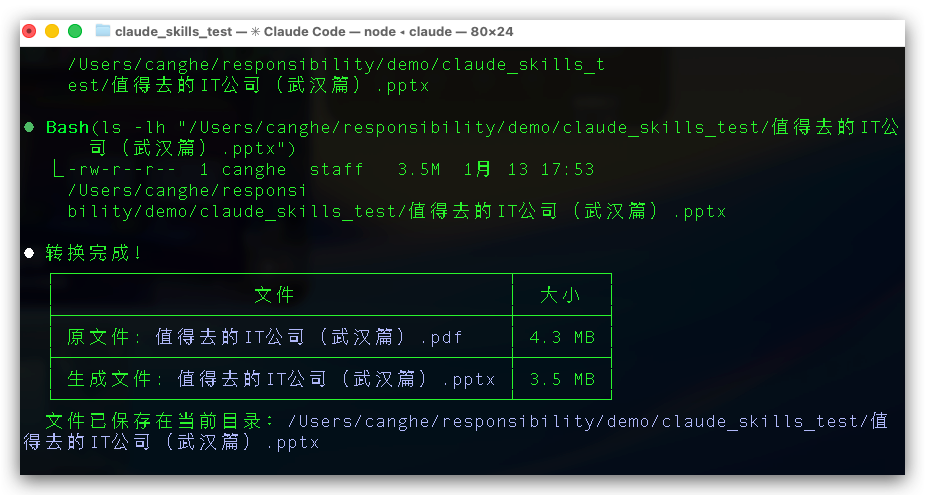

The conversion is done:

Open the project and take a look:

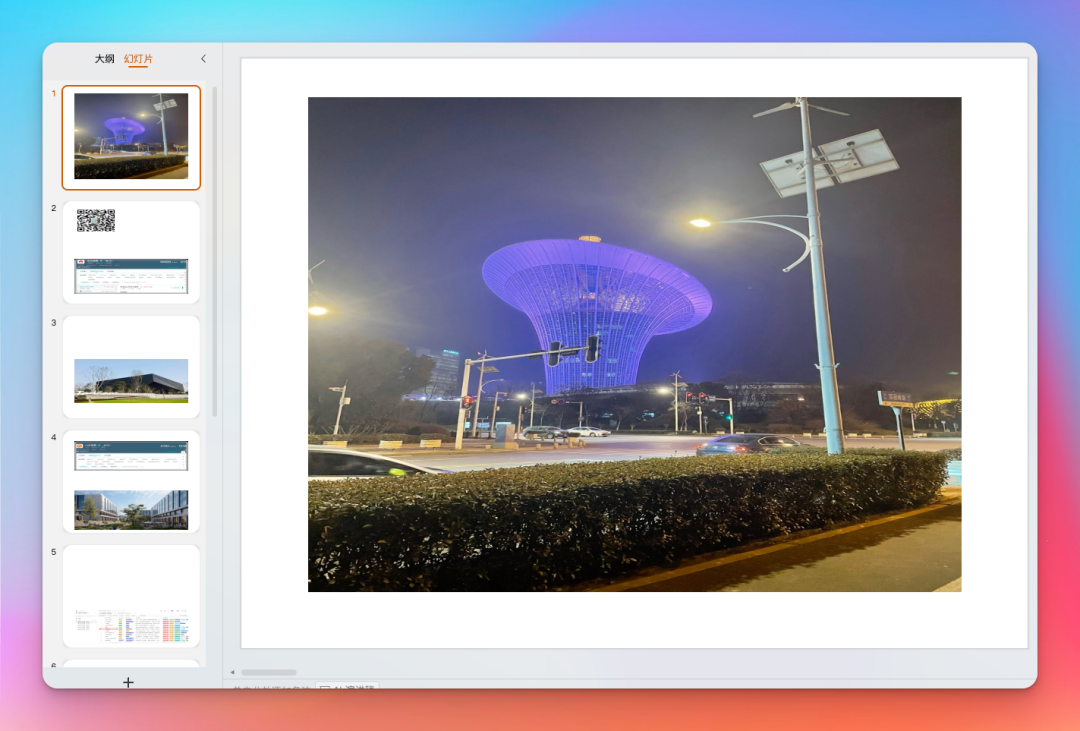

Here is the result, and it looks fine:

Building a skill takes just a few minutes, which is very convenient.

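Skills change the basic way we collaborate with AI. They turn one-off prompts into durable, composable knowledge assets.

By giving AI an extensible library of procedural memory, Skills are laying the foundation for the next generation of AI Agents: more capable, more autonomous, and better able to work seamlessly with human experts.

Skills package experience and methods into skill packs, lowering the cost of working across fields and making it easier for ordinary people to create their own Agents.

In my view, mastering Skills means mastering the ability to scale organizational wisdom.

Going forward, Skills will stay as hot as ever, and more and more of them will appear.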

That's all for today's article. Thank you for reading, and see you next time.

This article is by 【苍何】, an author at 人人都是产品经理. WeChat official account: 【苍何】