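- ByteDance has released Infinity, an autoregressive text-to-image model whose performance surpasses Stable Diffusion 3. It uses a bitwise token framework, and the code is available on GitHub.

- Alibaba Cloud and Black Sesame Technologies have ported the Tongyi Qianwen large model to the Wudang C1200 automotive-grade chip, enabling offline, multi-turn natural conversation in smart vehicles.

- Autodesk has introduced "Bernini", a generative AI model built for 3D design. It converts text and sketches into 3D files and can generate hollow structures.

- Alibaba Cloud and RayNeo have formed an exclusive strategic partnership. The Tongyi family of models will provide technical support for RayNeo's products, and the V3 AI camera glasses are due to launch soon.

- A Microsoft research team has introduced "Large Action Model" (LAM) technology that can operate Windows programs autonomously. In a Word test it completed tasks 71% of the time, ahead of GPT-4o at 63%.

- NVIDIA has launched the GB300 AI server, which uses liquid cooling and carries B300 GPUs with 288GB of HBM memory, significantly improving performance and stability.

- Stanford University has released STORM & Co-STORM, an open-source AI writing system that combines Bing search with GPT-4o mini and supports multi-perspective dialogue.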

Popular Articles

- Building a Collaborative Community: An AI Club for Product Managers (2024-11-11)

- Exclusive First Release | Global Value Investing and the Times (2025-01-04)

- Teardown | The 88-Yuan Open-Source ESP32 Xiaozhi AI Robot from China, Running DeepSeek and Tongyi Qwen2.5-Max (2025-04-14)

- The Two Product Managers Who Understand Large Models Best Discuss How to Build AI Products (2024-11-24)

- The Books We Recommended Over the Past Year (2025-01-29)

- A New Round of Multimodal Competition | Major Multimodal Updates from Doubao, DeepSeek, and Moonshot AI

- Finally, Someone Explains Clearly How ERP, CRM, OA, HR, and Finance Systems Relate (2024-11-30)

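News Flash

Teardown | The 88-Yuan Open-Source ESP32 Xiaozhi AI Robot from China, Running DeepSeek and Tongyi Qwen2.5-Max

AI smart hardware is booming, and there is bound to be a device that suits you.

The Xiaozhi AI chatbot is built on Espressif's ESP32-S3 core board and can connect to several large AI models (DeepSeek, OpenAI, Tongyi Qwen). Its features include customizable conversation personas, a large knowledge base, long-term memory, and voiceprint recognition. More than a smart tool, it aims to be an AI assistant that truly "gets you" and adds warmth and convenience to every day. Whether it is solving a problem or sharing a happy moment, Xiaozhi's combination of intelligence and gentleness is meant to make technology feel warmer and life a little better.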

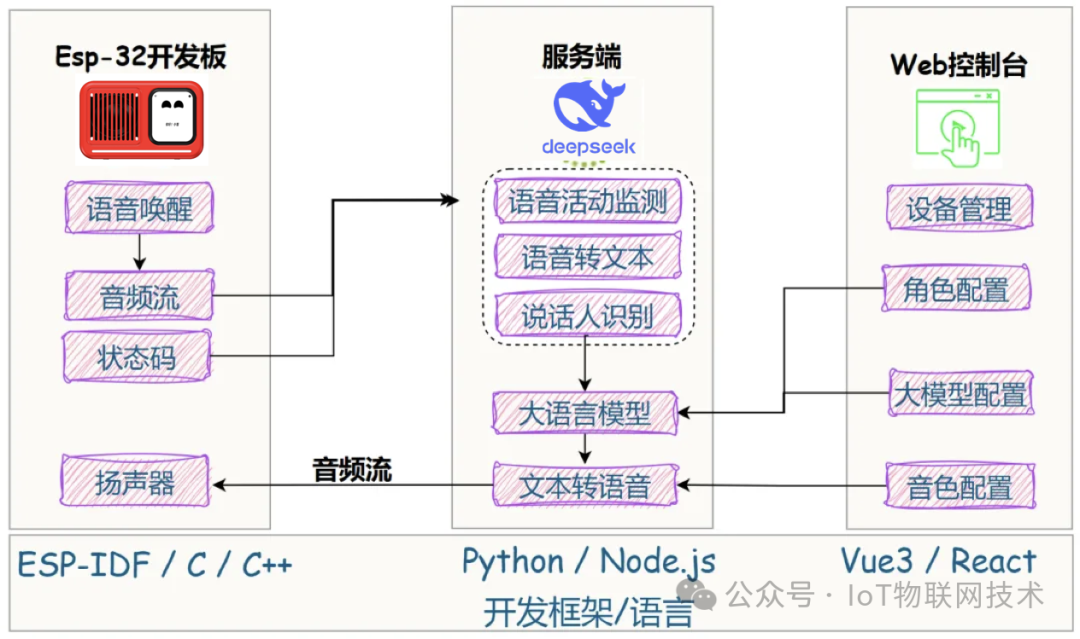

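- Communication protocol: based on the xiaozhi-esp32 protocol, with data exchanged over WebSocket.

- Conversation: supports wake-word dialogue, manual dialogue, and real-time interruption. The device goes to sleep automatically after a long period without conversation.

- Multilingual recognition: Mandarin, Cantonese, English, Japanese, and Korean (FunASR is used by default).

- LLM module: the LLM can be switched flexibly, with Alibaba Tongyi Qwen, DeepSeek, OpenAI, and others available.

- TTS module: supports EdgeTTS (default), Volcano Engine Doubao TTS, and other TTS interfaces for speech synthesis.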

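Xiaozhi AI Chatbot Features

Whether the topic is science, history and culture, everyday know-how, or entertainment gossip, the Xiaozhi AI chatbot has an answer at hand, making every conversation fun and surprising. Users can chat with it about anything, from the mysteries of the universe to the trivia of daily life, and get replies that are both entertaining and thoughtful.

The chatbot also supports freely switching and customizing conversation personas, so characters with different personalities and strengths can cover a wide range of conversational needs. Whether as a witty comedian, a learned scholar, or a gentle and caring confidant, Xiaozhi can play the part with ease, giving users an ever-changing AI identity experience.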

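Intelligent Interaction Capabilities

- Offline voice wake-up: implemented with ESP-SR.

- Streaming voice dialogue: supports the WebSocket and UDP protocols.

- Voiceprint recognition: identifies who is speaking.

- Short-term memory: summarizes each round of dialogue.

- Custom personas: supports prompt and voice timbre settings.

- LCD display: shows emoji and conversation content.

- Large models: can connect to DeepSeek, OpenAI, Tongyi Qianwen, and others.

- Connectivity: supports both Wi-Fi and 4G network access.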

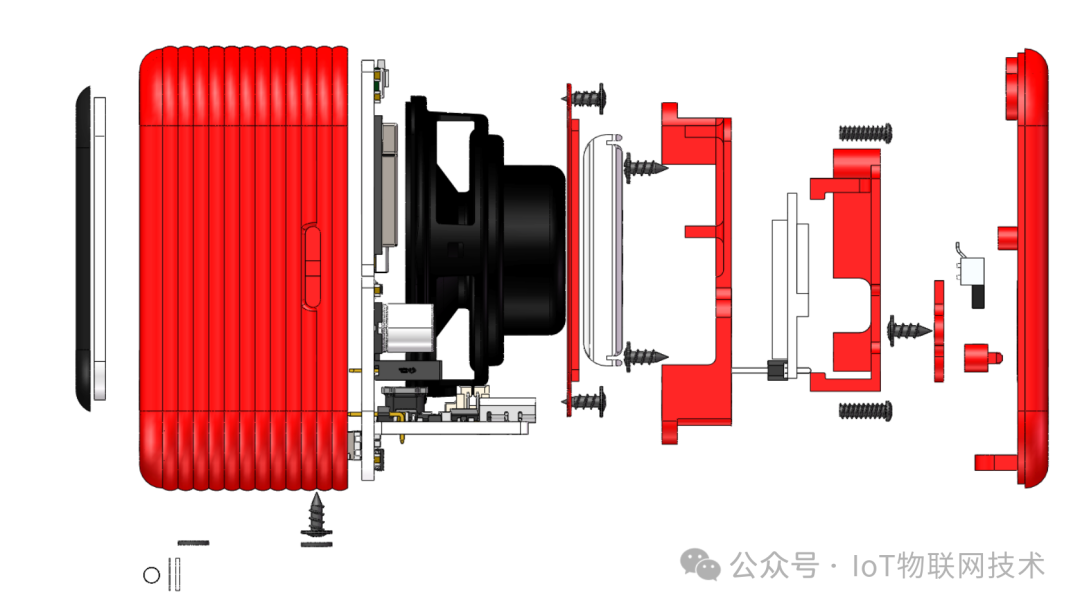

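3D-Printed Enclosure

The Xiaozhi AI chatbot's enclosure is 3D-modeled and printed as a single piece, with the following advantages:

- Easy assembly: the structure is optimized so that a single screw completes the assembly, saving time and effort.

- Refined fit: the panel bonding process keeps the exterior clean and smooth, with seamless details.

- Comfortable viewing angle: the screen window is ergonomically designed to give a comfortable viewing angle on a desk.

- Microphone isolation: a dedicated isolated chamber improves microphone pickup and reduces noise interference.

- Stable and protective: anti-slip foot pads on the bottom keep the device steady and prevent scratches on the desk.

- USB-C compatibility: the USB-C opening is precisely sized to fit both magnetic and standard cables.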

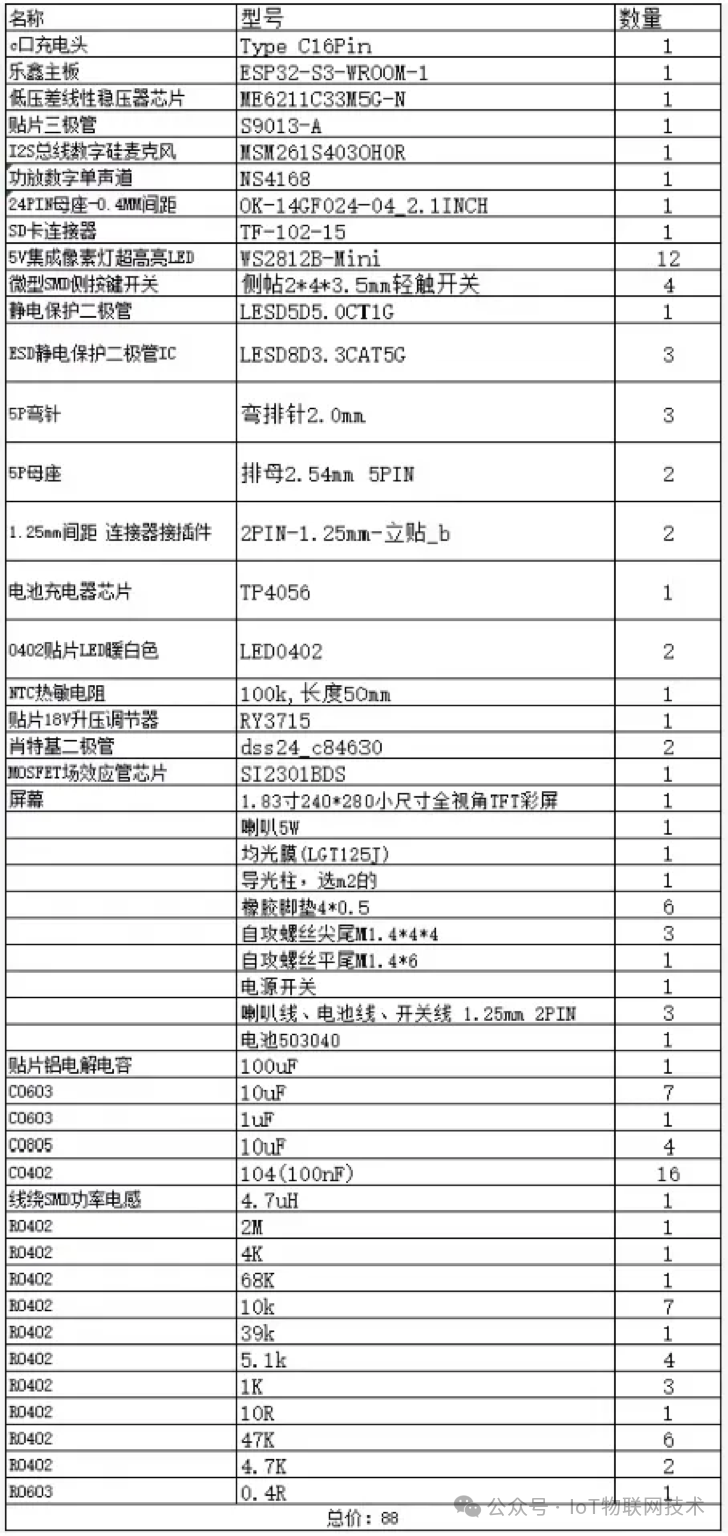

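Xiaozhi AI Chatbot Hardware

Core design of the Xiaozhi AI chatbot:

- The ESP32-S3-WROOM-1-N16R8 main controller drives a 1.28-inch round display over SPI at a resolution of 240x240.

- The power management module keeps the device running stably on a 5V input, which suits USB power.

- The ES8311 audio codec provides high-performance audio processing, supporting microphone input and speaker output.

Power management:

- An integrated power management IC keeps the supply stable and prevents malfunctions caused by power fluctuations.

Signal processing:

- The SPI clock frequency is set to 40MHz for efficient, reliable data transfer.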
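As a rough sanity check on those figures, the sketch below estimates how long one full-screen refresh of the 240x240 display would take over a 40MHz SPI bus. It assumes 16-bit RGB565 pixels on a single data line and ignores command overhead and gaps between transfers. None of that is stated in the article, so the result is an upper bound on frame rate, not a measured value.

```python
# Back-of-the-envelope estimate of full-frame refresh time over SPI.
# Assumptions (not from the article): 16 bits per pixel (RGB565),
# one data line, and no command or inter-transfer overhead.

WIDTH_PX = 240
HEIGHT_PX = 240
BITS_PER_PIXEL = 16          # assumed RGB565
SPI_CLOCK_HZ = 40_000_000    # 40 MHz, as stated in the article


def frame_bits(width: int, height: int, bpp: int) -> int:
    """Number of bits needed to send one full frame."""
    return width * height * bpp


def frame_time_seconds(bits: int, clock_hz: int) -> float:
    """Transfer time for `bits` on a single-line SPI bus (1 bit per clock)."""
    return bits / clock_hz


bits = frame_bits(WIDTH_PX, HEIGHT_PX, BITS_PER_PIXEL)
seconds = frame_time_seconds(bits, SPI_CLOCK_HZ)
max_fps = 1.0 / seconds

print(f"frame size: {bits // 8} bytes")
print(f"transfer time: {seconds * 1000:.2f} ms")
print(f"theoretical max frame rate: {max_fps:.1f} fps")
```

Under these assumptions a frame is 115,200 bytes and takes about 23 ms to send, so the bus alone would cap full-screen updates at roughly 43 frames per second.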

The core module list for the Xiaozhi AI chatbot is as follows:

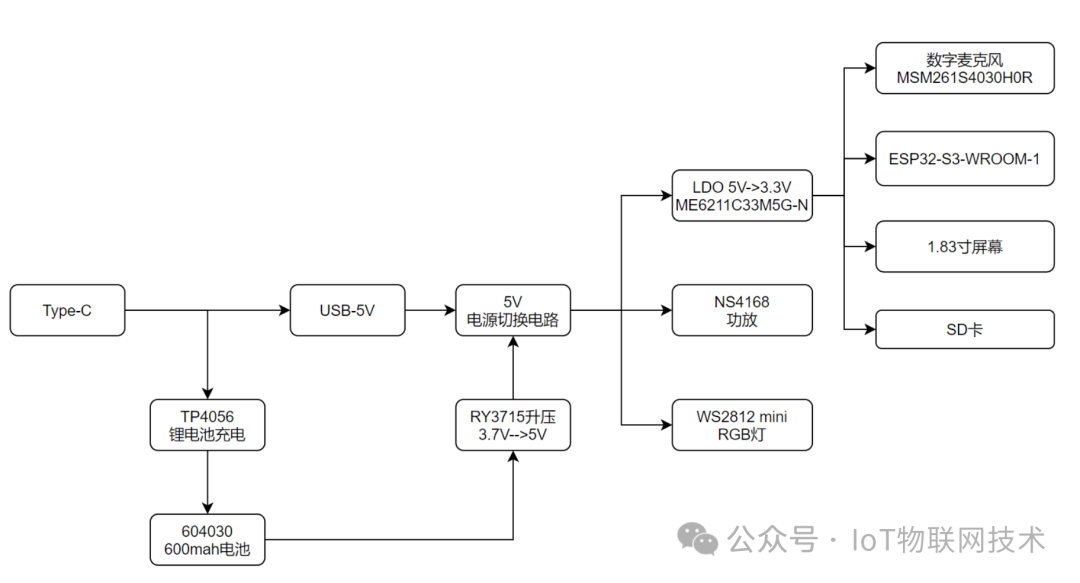

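Xiaozhi AI Chatbot Circuit Design

Power enters through the USB Type-C port at 5V. This 5V feeds a TP4056, which charges the lithium battery, and a boost circuit steps the battery voltage back up to 5V. A power-path switching circuit selects between the two 5V sources: when USB is plugged in, the system automatically switches to the USB 5V path, and when USB is disconnected, it runs from the boosted battery 5V. The system does not lose power during the switchover. An LDO linear regulator converts 5V to 3.3V, which powers the ESP32 main controller, the SD card, the digital microphone, and the display. The 5V rail also powers the audio amplifier and the RGB LED directly.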

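The enclosure has an anti-slip texture and four anti-slip pads on the bottom, so it sits on a desk without sliding. The screen is recessed into the front shell, which looks clean and keeps it from being scratched when the device is laid flat. The rear cover is held by a latch plus a screw at the bottom. The screw is not exposed and also locks the rear shell in place, keeping the overall look clean and simple.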

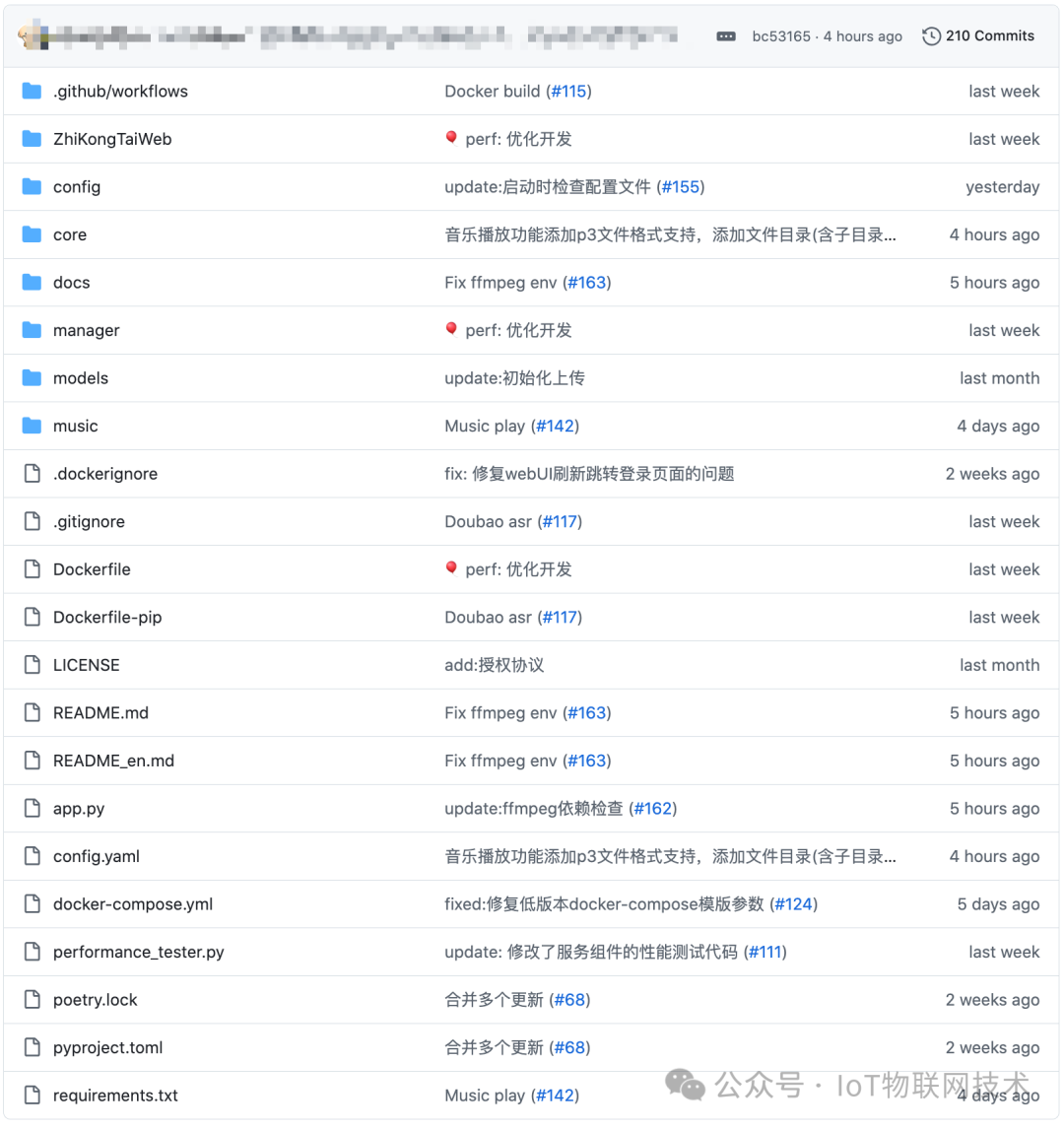

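Xiaozhi AI Chatbot Hardware Source Code

Xiaozhi AI chatbot project source code:

https://github.com/78/xiaozhi-esp32

Xiaozhi AI Chatbot Server Source Code

On the server side, each functional module lives in its own directory, which eases development and maintenance and improves extensibility.

- asr-server + asr-worker: provide voice activity detection, speech-to-text, speaker identification, and related services.

- tts-server: provides voice management, voice cloning, speech synthesis, and related services, and connects to locally deployed speech models.

- main-server: the main service, which coordinates speech recognition, the large model, speech synthesis, and other services, and connects to the backend database.

Xiaozhi AI chatbot server source code:

https://github.com/xinnan-tech/xiaozhi-esp32-server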
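To picture how a main service could coordinate the recognition, large-model, and synthesis services described above, here is a minimal sketch of one conversational turn. Every class and function name in it is hypothetical and chosen for illustration; it is not code from xiaozhi-esp32-server, and the real project may structure this quite differently.

```python
# Illustrative sketch of how a main server might chain ASR -> LLM -> TTS.
# Every name here is hypothetical; this is not code from xiaozhi-esp32-server.
from dataclasses import dataclass
from typing import Callable


@dataclass
class Pipeline:
    asr: Callable[[bytes], str]      # speech audio -> recognized text
    llm: Callable[[str], str]        # user text -> reply text
    tts: Callable[[str], bytes]      # reply text -> synthesized audio

    def handle_utterance(self, audio: bytes) -> bytes:
        """Run one conversational turn through the three services."""
        text = self.asr(audio)
        if not text.strip():
            return b""               # nothing recognized, nothing to say
        reply = self.llm(text)
        return self.tts(reply)


# Stand-in services so the sketch runs without any real models.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8", errors="ignore")


def fake_llm(text: str) -> str:
    return f"You said: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


pipeline = Pipeline(asr=fake_asr, llm=fake_llm, tts=fake_tts)
print(pipeline.handle_utterance(b"hello"))
```

Passing the three services in as plain callables mirrors the idea of keeping each module independent: any one of them can be swapped without touching the coordinating code.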

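Similar products are already available on Amazon.

Leaderboard Express: From AI Sales Assistants to Smart Job-Search Tools, 5 Innovative Apps in Practice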

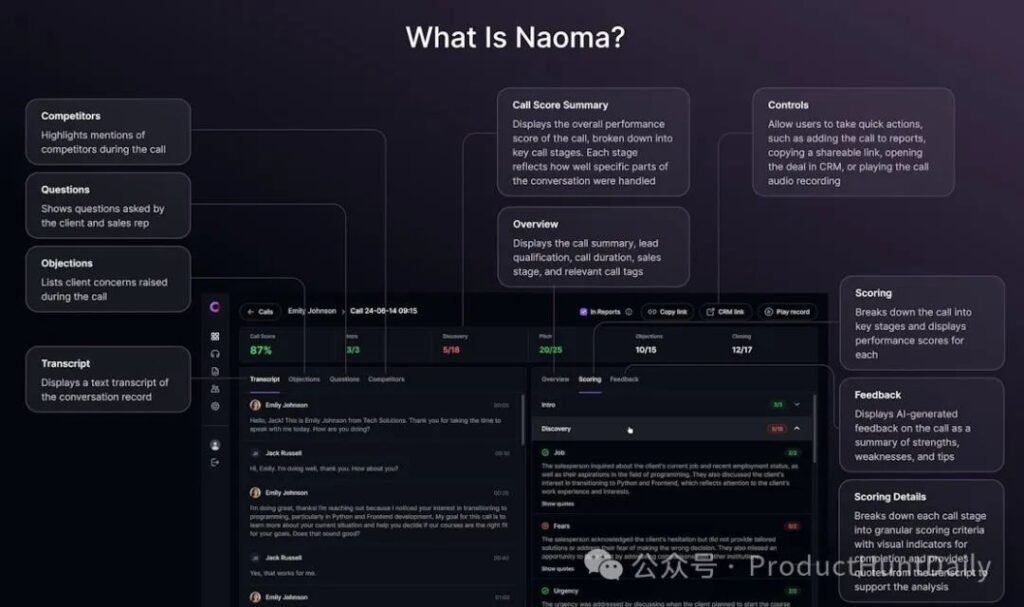

- Naoma: 销售效率提升 (539)

- OpenJobs AI: 智能求职匹配 (444)

- Piano Widget: 音乐创作工具 (271)

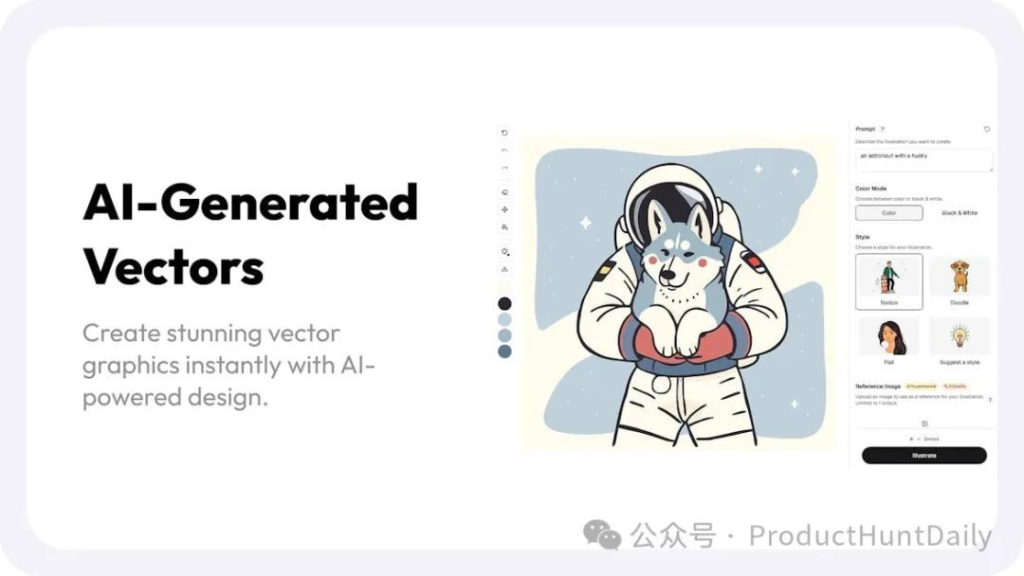

- illustration.app: AI矢量插图生成 (258)

- Googly Eyes: 桌面趣味互动 (238)

- TimeDive: 历史解谜游戏 (143)

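1. Naoma

Votes: 539

Website: https://naoma.ai

Tags: Sales, Artificial Intelligence, Data Analytics

One-line summary: Naoma uses AI to analyze sales conversation data in the CRM in depth, identifies the communication patterns of top salespeople, and offers personalized improvement suggestions. This helps sales teams replicate successful practice at scale and addresses the low efficiency of manual call reviews and the difficulty of capturing experience.

Editor's take: Telesales teams in China often struggle with long training cycles for new hires and the difficulty of replicating top performers' scripts. Naoma's "key-moment tagging + script alignment analysis" model could inspire a localized version, for example one that analyzes WeChat voice recordings and automatically turns a top seller's ice-breaking techniques into an actionable SOP.

2. OpenJobs AI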

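Votes: 444

Website: https://www.openjobs-ai.com

Tags: Artificial Intelligence, Smart Search, Career Planning

One-line summary: The product matches job requirements precisely through natural-language conversation and gives job seekers an intelligently optimized one-stop solution, addressing the inefficiency of mass applications and the difficulty of improving a resume.

Editor's take: Information overload is severe in China's job market. Its natural language processing approach could be combined with user behavior data from platforms such as BOSS Zhipin to build more accurate models for predicting job requirements, lowering the trial-and-error cost for job seekers.

3. Piano Widget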

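Votes: 271

Website: https://apps.apple.com/app/id6450440460

Tags: iOS, Music, Apple Apps, Instrument Simulation, Creative Widgets

One-line summary: A high-quality piano simulator that can be played from a widget, the lock screen, or the Dynamic Island without launching the app. With more than 30 instrument sounds and an immersive touch experience, it lets music lovers capture ideas at any moment and makes composing and learning more convenient.

Editor's take: In China's highly competitive mobile app market, this product makes clever use of iOS widgets and the Dynamic Island to blend a tool seamlessly into its context. It shows developers how system-level entry points can raise usage frequency: calling up a piano directly from the lock screen is a "zero-step" design that greatly lowers the barrier to creating.

4. illustration.app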

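Votes: 258

Website: https://www.illustration.app

Tags: Design Tools, SaaS, Artificial Intelligence

One-line summary: The product uses AI to generate customizable vector illustrations in seconds. It addresses the copyright restrictions, high costs, and creative barriers that designers, developers, and marketers face when looking for custom visuals, offering one-click, high-quality graphics cleared for commercial use.

Editor's take: UI design and independent media in China have long suffered from expensive licensing and hard-to-edit assets. This product's instant AI generation gives small and mid-sized teams a new route to compliant assets, and its SVG output is especially well suited to the frequent mobile adaptation work that designers in China do.

5. Googly Eyes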

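Votes: 238

Website: https://sindresorhus.com

Tags: Mac, Menu Bar Apps, Fun Tools, Cursor Interaction, Desktop Customization

One-line summary: The product embeds animated eyes in the Mac menu bar that follow the cursor in real time and blink when you click, giving users who want a more personal workspace a light, stress-relieving visual interaction that eases work fatigue.

Editor's take: Office software in China generally lacks playful interaction. Borrowing this product's "cursor awareness + micro-animation" design logic, one could build menu bar plugins with animations for the 24 solar terms or Chinese-style visual elements, meeting younger users' appetite for fun at work while staying lightweight enough not to hurt productivity.

6. TimeDive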

票数:143

官网链接:https://apps.apple.com/us/app/timedive-guess-year-place/id6739967794

标签:用户体验、免费游戏、历史解谜、多人竞技、地理挑战

一句话介绍:这款通过观察建筑细节与地理线索进行时空解谜的游戏,以多人竞技和每日挑战模式,让历史爱好者和推理玩家在趣味积分赛中感知时代变迁。

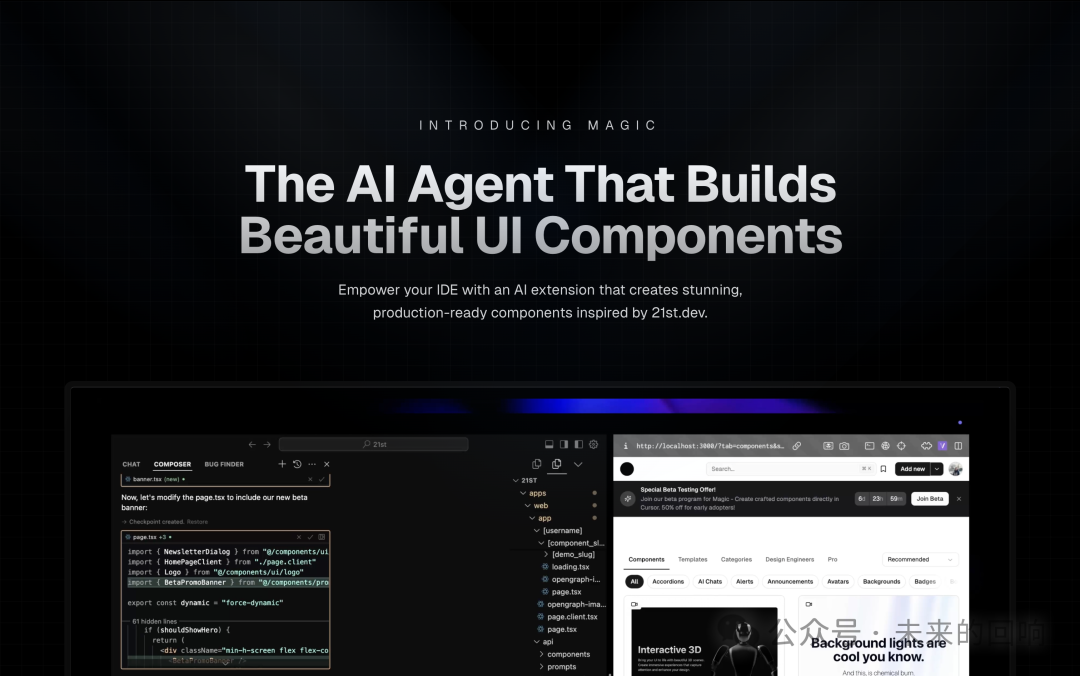

小编解读:TimeDive的"画面线索+地图定位+时间竞猜"玩法,为国内知识类游戏提供新思路——可结合本地历史地标设计关卡,用短视频传播高光推理片段,增强社交传播性。如何在Cursor中使用Magic MCP生成好看的UI(第二期)

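Hi everyone. The previous tutorial on Magic MCP got a very strong response, so in this installment I'll share a few tips from my own use of Magic MCP.

I. Corrections to the previous article, "How to Use Magic MCP in Cursor to Generate Good-Looking UI"

1. First, a correction to the previous article. When I tested calling Magic MCP from Cursor, the call reported an error, and opening the call result showed a 502 server error.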

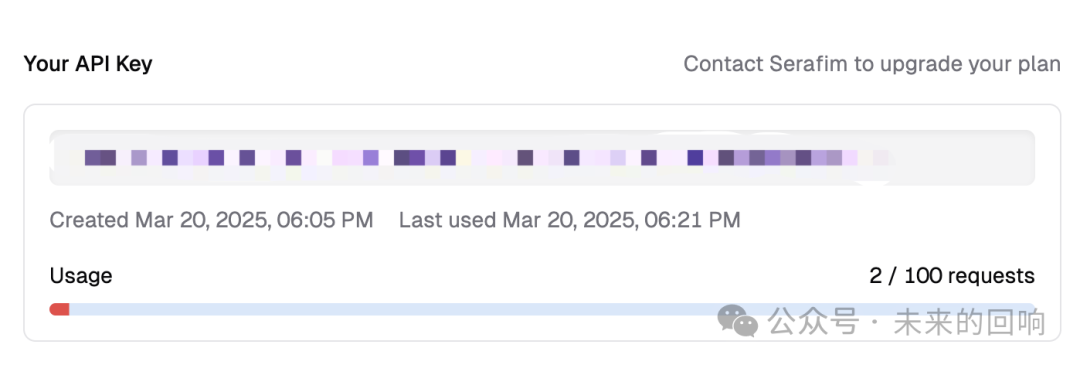

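2. Yet the API page on the 21st.dev website showed the call as successful, and it consumed 2 requests, which was frustrating. I suspect a timeout caused by overly long output, but I have not yet found a way to configure the timeout for calls to the MCP server.

3. As a result, the website in the previous article was actually designed with the native design ability of Claude 3.7 thinking. I apologize to everyone for that oversight.

4. In fact, Claude 3.7 thinking is a very strong designer with excellent taste. Using Claude 3.7 on its own for product prototyping or website UI design is very nearly sufficient. Here is another test website I built with it.

II. Tips for using Magic MCP in Cursor

1. Use /ui to invoke Magic MCP quickly.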

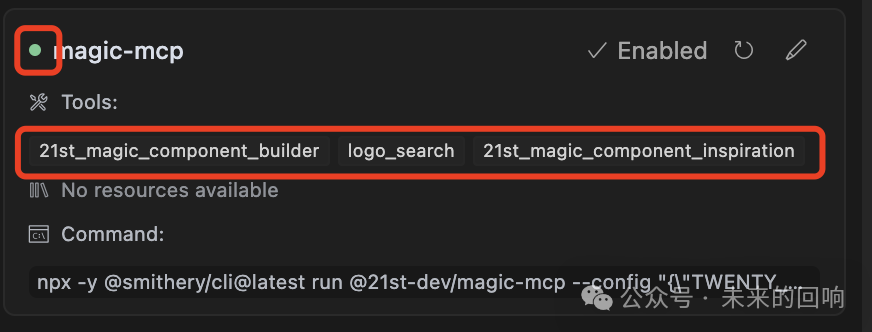

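2. After repeated testing, I found that calling Magic MCP in Cursor with the command generated by smithery.ai was unstable, and switching to the official mcp.json configuration fixed it. Only then did the MCP page in Cursor Settings show the Magic MCP server with a green "available" status, along with the tools it exposes.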

"magic-mcp": {"command": "npx","args": ["-y","@21st-dev/magic@latest", "--config","API_KEY=\"YOUR-KEY\""]}

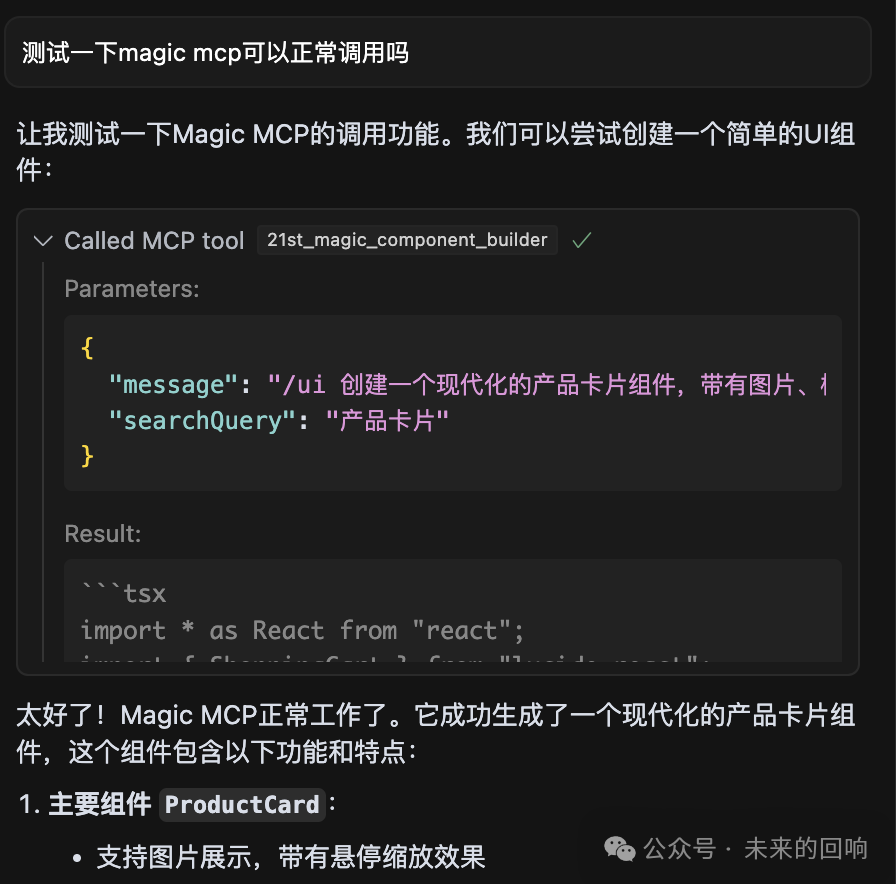

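3. For now, therefore, I ask whether Magic MCP can currently be called before I use it. If the result returns code normally, the call works.

4. Using Magic MCP in Cursor still has quite a few problems, so if you have the option, you can also use it in the Claude desktop client.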
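The one-line entry in step 2 is only a fragment of a configuration file. As a hedged illustration, the sketch below builds a complete JSON document around it. It assumes the entry is nested under a top-level "mcpServers" key, which is how Cursor's mcp.json is commonly laid out. The function name is hypothetical, and the arguments are copied unchanged from the article.

```python
# Build the Magic MCP entry from the article as a complete JSON document.
# Assumption: the entry is nested under a top-level "mcpServers" key,
# which is how Cursor's mcp.json is commonly laid out.
import json


def magic_mcp_config(api_key: str) -> dict:
    """Return an mcp.json-style dict containing the magic-mcp server entry."""
    return {
        "mcpServers": {
            "magic-mcp": {
                "command": "npx",
                "args": [
                    "-y",
                    "@21st-dev/magic@latest",
                    "--config",
                    f'API_KEY="{api_key}"',
                ],
            }
        }
    }


config = magic_mcp_config("YOUR-KEY")
print(json.dumps(config, indent=2))
```

Generating the file through `json.dumps` avoids hand-escaping the quotes around the key, which is an easy place to introduce a syntax error. Where the file should be saved is left out here, so check Cursor's documentation for the location it expects.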

For more tutorials and news on AI tools, Cursor, and MCP, stay tuned for my upcoming posts!

-END-

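AI Top 50: AI Agents Move Beyond Chat

The 2025 edition of the AI 50 list shows how companies are using agents and reasoning models to take on real enterprise workflows

Artificial intelligence is entering a new phase in 2025. For years, AI tools mainly answered questions or generated content on command, while this year's innovation is about AI actually getting work done. The 2025 Forbes AI 50 list illustrates this pivotal shift: its startups mark a move from AI that merely responds to prompts to AI that solves problems and completes entire workflows. For all the talk about large model makers such as OpenAI, Anthropic, and xAI, the biggest change in 2025 lies in the application-layer tools that use AI to produce real business results.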

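From Chatbots to Completed Workflows

Historically, AI assistants could chat or provide information, but humans still had to act on the output. In 2025 that is changing. The legal AI startup Harvey, for example, has shown that its software can do more than answer legal questions: it can handle entire legal workflows, from document review to predictive case analysis. Its platform can draft documents, suggest revisions, and even help automate negotiations, case management, and client outreach, tasks that would normally take a team of junior lawyers. That achievement earned Harvey a place on the Forbes AI 50 list, and it exemplifies AI's evolution from a useful tool into a hands-on problem solver.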

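Enterprise Tools Leading the Way

Many of the standout AI 50 companies are enterprise tools that do on-the-job work. Sierra, and Cursor (the sole product of the startup Anysphere), are emblematic of this new generation of business AI. Sierra automates customer service while greatly improving the experience, opening the way for companies to help customers at any time. Cursor, meanwhile, has taken the software developer community by storm. Its technology not only autocompletes lines of code (as GitHub Copilot does) but also generates entire features and applications from requests written in plain English.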

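Robotics Is on the Rise

Although we have not yet reached the point where companies deploy robots at scale, robotics startups made meaningful progress over the past year by integrating hardware with models built on transformers (the "T" in ChatGPT). Robotics featured prominently in NVIDIA's keynote at its recent developer conference, where Jensen Huang claimed that "physical AI for industry and robotics is a $50 trillion opportunity." Figure AI recently announced BotQ, its high-volume manufacturing facility capable of producing 12,000 humanoid robots per year, along with Helix, its new general-purpose vision-language-action (VLA) model. Another AI 50 company, Skild AI, is taking a different approach: instead of building its own robots, it is developing Skild Brain, a general-purpose robotics foundation model that can be integrated into many kinds of robots. Skild also plans to sell services to the robotics industry built on Skild Brain.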

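Consumers on the Cusp of AI Productivity

So far, everyday consumers have encountered advanced AI mainly through chatbots such as OpenAI's ChatGPT or Anthropic's Claude (and newcomers such as Grok). Hundreds of millions of people have tried these tools, but we have not yet seen a truly mainstream application in which AI completes everyday tasks for people. That may change in 2026. As the technology matures and people see how AI saves time and money at work, expect a wave of consumer-facing AI products that handle entire tasks on the user's behalf. Anthropic, for example, recently launched Claude Code, which enables consumers to write software. These new capabilities raise the prospect of AI that can manage your schedule, book travel, or organize your files end to end.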

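2025 Is the Turning Point, 2026 Brings Broader Adoption

This year is a turning point: AI has graduated from an answer engine to an action engine in the workplace. The 2025 AI 50 companies show that AI can be trusted with meaningful workloads and can deliver real results, laying the groundwork for broader adoption. As we move into 2026, expect the gains seen in the enterprise to start spreading into daily life. Challenges such as accuracy and safety remain, but the momentum is undeniable. In 2025, AI is doing real work.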

Building a Personal AI Knowledge Base with Ollama+DeepSeek+AnythingLLM

DeepSeek has come under a large number of overseas attacks over the past month. Since January 27 the attacks have escalated: in addition to DDoS attacks, analysis has revealed numerous brute-force password attacks, causing frequent service outages.

After my last article, someone suggested that putting AnythingLLM in front of the model as a wrapper would be more comfortable to use. The official website's service has also been a bit unstable, so today I decided to set up a simple GUI deployment at home. The first step is to deploy DeepSeek locally. I covered that in detail in my previous article, so I won't repeat it here. Once the deployment is complete, I'll add the wrapper.

You can download AnythingLLM from the official website:

https://anythingllm.com/desktop

Just keep clicking "Next" until the installation is complete. Once it's installed, start the configuration: select "Ollama" and leave the address at its default setting.
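If AnythingLLM does not find any models at this step, it can help to confirm that Ollama is reachable at the address you entered. The following is a minimal sketch, assuming Ollama's usual default of http://127.0.0.1:11434 and its /api/tags endpoint for listing local models. The helper names are my own, and the base URL should be adjusted if your setup differs.

```python
# Check that a local Ollama server is reachable and list its models.
# Assumptions: default base URL http://127.0.0.1:11434 and the /api/tags
# endpoint; change OLLAMA_BASE_URL if your installation differs.
import json
import urllib.error
import urllib.request

OLLAMA_BASE_URL = "http://127.0.0.1:11434"


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from a parsed /api/tags response."""
    return [m["name"] for m in tags_response.get("models", [])]


def list_local_models(base_url: str = OLLAMA_BASE_URL, timeout: float = 3.0) -> list[str]:
    """Return installed model names, or an empty list if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return model_names(json.load(resp))
    except (urllib.error.URLError, OSError, ValueError):
        return []


if __name__ == "__main__":
    names = list_local_models()
    print(names if names else "Ollama not reachable or no models installed")
```

If the DeepSeek model you pulled earlier appears in the printed list, the same address should work in AnythingLLM's Ollama settings.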

Then create a workspace.

Then, set it up like this in the settings, and it should be good to go.

Then you should be able to start chatting and asking questions happily!

Resource Compilation | 32 Python Crawler Projects to Satisfy Your Appetite!

Today, I have compiled a list of 32 Python web scraping projects for everyone.

I’ve gathered these projects because web scraping is a simple and fast way to get started with Python, and it’s also great for beginners to build confidence. All the links point to GitHub, so have fun exploring! O(∩_∩)O~

- WechatSogou [1] - A WeChat public account crawler based on Sogou's WeChat search. It can be expanded to a Sogou search-based crawler, returning a list where each item is a dictionary of detailed public account information.

- DouBanSpider [2] - A Douban book crawler. It can scrape all books under Douban's book tags, rank them by rating, and store them in Excel for easy filtering, such as finding highly-rated books with over 1,000 reviewers. Different topics can be saved in separate sheets. It uses User Agent spoofing and random delays to mimic browser behavior and avoid being blocked.

- zhihu_spider [3] - A Zhihu crawler. This project scrapes user information and social network relationships on Zhihu. It uses the Scrapy framework and stores data in MongoDB.

- bilibili-user [4] - A Bilibili user crawler. Total data: 20,119,918. Fields include user ID, nickname, gender, avatar, level, experience points, followers, birthday, address, registration time, signature, and more. It generates a Bilibili user data report after scraping.

- SinaSpider [5] - A Sina Weibo crawler. It mainly scrapes user personal information, posts, followers, and followings. It uses Sina Weibo cookies for login and supports multiple accounts to avoid anti-scraping measures. It primarily uses the Scrapy framework.

- distribute_crawler [6] - A distributed novel download crawler. It uses Scrapy, Redis, MongoDB, and graphite to implement a distributed web crawler. The underlying storage is a MongoDB cluster, distributed via Redis, and the crawler status is displayed using graphite. It mainly targets a novel website.

- CnkiSpider [7] - A CNKI (China National Knowledge Infrastructure) crawler. After setting search conditions, run src/CnkiSpider.py to scrape data, which is stored in the /data directory. The first line of each data file contains the field names.

- LianJiaSpider [8] - A Lianjia crawler. It scrapes historical second-hand housing transaction records in Beijing. It includes all the code from the Lianjia simulated login article.

- scrapy_jingdong [9] - A JD.com crawler based on Scrapy. Data is saved in CSV format.

- QQ-Groups-Spider [10] - A QQ group crawler. It batch scrapes QQ group information, including group name, group number, member count, group owner, group description, etc., and generates XLS(X) / CSV result files.

- wooyun_public [11] - A WooYun crawler. It scrapes WooYun's public vulnerabilities and knowledge base. All public vulnerabilities are stored in MongoDB, taking up about 2GB. If the entire site, including text and images, is scraped for offline querying, it requires about 10GB of space and 2 hours (10M broadband). The knowledge base takes up about 500MB. Vuln search uses Flask as the web server and Bootstrap for the frontend.

- spider [12] - A hao123 website crawler. Starting from hao123, it crawls outward through external links on a rolling basis, collecting URLs and recording the title and the number of internal and external links for each one. Tested on Windows 7 32-bit, it can collect about 100,000 URLs every 24 hours.

- findtrip [13] - A flight ticket crawler (Qunar and Ctrip). Findtrip is a Scrapy-based flight ticket crawler, currently integrating data from two major ticket websites in China (Qunar + Ctrip).

- 163spider [14] - A NetEase client content crawler based on requests, MySQLdb, and torndb.

- doubanspiders [15] - A collection of Douban crawlers for movies, books, groups, albums, and more.

- QQSpider [16] - A QQ Zone crawler, including logs, posts, personal information, etc. It can scrape 4 million pieces of data per day.

- baidu-music-spider [17] - A Baidu MP3 site crawler, using Redis for resumable scraping.

- tbcrawler [18] - A Taobao and Tmall crawler. It can scrape page information based on search keywords and item IDs, with data stored in MongoDB.

- stockholm [19] - A stock data (Shanghai and Shenzhen) crawler and stock selection strategy testing framework. It scrapes stock data for all stocks in the Shanghai and Shenzhen markets over a selected date range. It supports defining stock selection strategies using expressions and multi-threading. Data is saved in JSON and CSV files.

- BaiduyunSpider [20] - A Baidu Cloud crawler.

- Spider [21] - A social data crawler. It supports Weibo, Zhihu, and Douban.

- proxy pool [22] - A Python crawler proxy IP pool.

- music-163 [23] - A crawler for scraping comments on all songs from NetEase Cloud Music.

- jandan_spider [24] - A crawler for scraping images from Jandan.

- CnblogsSpider [25] - A Cnblogs list page crawler.

- spider_smooc [26] - A crawler for scraping videos from MOOC.

- CnkiSpider [27] - A CNKI crawler.

- knowsecSpider2 [28] - A Knownsec crawler project.

- aiss-spider [29] - A crawler for scraping images from the Aiss app.

- SinaSpider [30] - A crawler that uses dynamic IPs to bypass Sina's anti-scraping mechanism for quick content scraping.

- csdn-spider [31] - A crawler for scraping blog articles from CSDN.

- ProxySpider [32] - A crawler for scraping and validating proxy IPs from Xici.

Update:

webspider [33] - This system is a job data crawler primarily using Python 3, Celery, and requests. It implements scheduled tasks, error retries, logging, and automatic cookie changes. It uses ECharts + Bootstrap for frontend pages to display the scraped data.
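Several of the projects above mention User-Agent spoofing and random delays as ways to look more like a browser. As a rough illustration of those two ideas using only the standard library, here is a minimal sketch. The User-Agent strings, delay bounds, and function names are illustrative values of my own and are not taken from any of the listed projects.

```python
# Sketch of two common scraping techniques: rotating the User-Agent header
# and sleeping a random interval between requests. The User-Agent strings
# and delay bounds are illustrative values, not taken from any listed project.
import random
import time
import urllib.request

USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def build_request(url: str, rng: random.Random) -> urllib.request.Request:
    """Create a request carrying a randomly chosen User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": rng.choice(USER_AGENTS)})


def random_delay(rng: random.Random, low: float = 1.0, high: float = 3.0) -> float:
    """Pick a delay in seconds, uniformly between `low` and `high`."""
    return rng.uniform(low, high)


def fetch_all(urls: list[str], rng: random.Random) -> list[bytes]:
    """Fetch each URL in turn, pausing a random interval between requests."""
    pages = []
    for i, url in enumerate(urls):
        if i > 0:
            time.sleep(random_delay(rng))
        with urllib.request.urlopen(build_request(url, rng), timeout=10) as resp:
            pages.append(resp.read())
    return pages


rng = random.Random()
req = build_request("https://example.com/", rng)
print(req.get_header("User-agent"))
```

Passing the `random.Random` instance in explicitly keeps the behavior reproducible when a seed is supplied, which makes the helpers easy to test without touching the network.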