Google TPU's Four "Asymmetric Advantages": How the Chip War Is Really Played

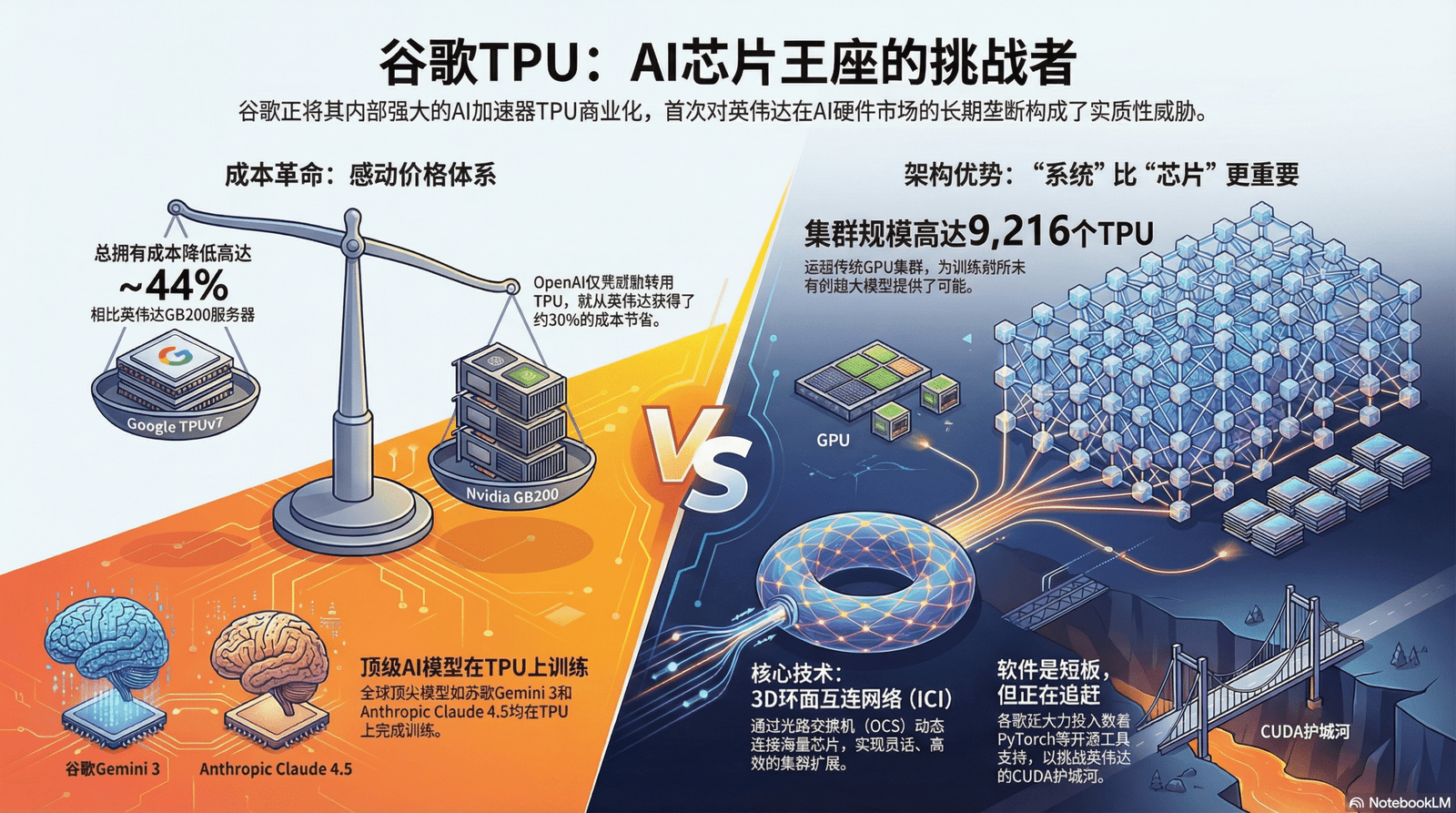

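In AI hardware, Google's TPU is quickly becoming a strong challenger to Nvidia and is changing the shape of the market. The TPU draws on its system-architecture advantages and higher real-world operating efficiency, and it also challenges established rules through new financing models, strengthening its competitiveness and gradually reshaping the future of AI infrastructure.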

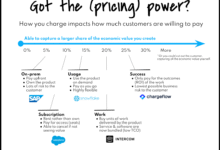

The gauntlet has been thrown. In the high-stakes arena of generative AI, where innovation often outpaces legal frameworks, ByteDance's impressive AI video generator, Seedance 2.0, has received its first major challenge: a formidable cease and desist letter from none other than Disney, the undisputed titan of intellectual property. This isn't just another corporate skirmish. It's a clash of titans: one wields an algorithm, the other commands an empire built on beloved characters and meticulously guarded stories. This legal maneuver isn't just a headline; it's a clear signal that the battle lines around AI and copyright are hardening, especially where the future of creative industries is concerned.

For those not tracking every pixel-perfect AI demo, Seedance 2.0 has been making significant waves. ByteDance, already a global powerhouse with TikTok, plunged into text-to-video generation with a model described as remarkably capable. Its ability to conjure compelling, high-quality video from simple text prompts has turned heads, rapidly positioning it as a serious contender in the burgeoning AI video generator space. Imagine: photorealistic scenes, intricate animated sequences, all democratizing video production beyond prior imagination. But this immense power carries immense scrutiny. Especially when such capabilities are forged from vast, often uncredited, datasets of existing media. And therein lies the precise crux of Disney's objection, a legal tripwire for the aspiring AI titan.

Let's be unequivocally clear: Disney's protection of its intellectual property isn't just legendary; it's a fortress. From the timeless silhouette of Mickey Mouse to the sprawling sagas of the Marvel Cinematic Universe and Star Wars, their multi-billion-dollar brand is meticulously built upon and fiercely defended. They don't merely protect their IP; they *are* the gold standard, the benchmark against which all others are measured. So, when an AI model, especially one from a behemoth like ByteDance, surfaces with the potential to generate content that *might* even subtly echo their vast reserves of copyrighted material, a legal challenge isn't just inevitable—it's a guaranteed, strategic strike.

While the precise specifics of Disney's complaint remain under wraps, the core issue is chillingly familiar: Did Seedance 2.0's gargantuan training data ingest vast quantities of copyrighted Disney content without permission? More critically, could the model's output potentially mimic Disney's distinctive visual styles, iconic characters, or even specific narrative beats? This extends far beyond mere direct replication. It probes the broader implications of derivative works, algorithmic influence, and the very essence of originality in an increasingly AI-driven creative landscape. Disney is not just defending assets; it's defending its creative soul.

This isn't an isolated incident. It's the latest, most powerful volley in an escalating war. We've witnessed similar legal skirmishes involving Stability AI (the maker of Stable Diffusion), Midjourney, and OpenAI, each facing challenges from artists, authors, and photographers over alleged misuse of their copyrighted works in training datasets. The Disney vs. ByteDance showdown, however, takes this debate to a new level. It pits one of the world's most litigious and successful IP holders against a leading, globally dominant AI innovator. The stakes are immense; the ripple effects will be felt across industries.

For AI Developers: This delivers a stark, undeniable warning. The Silicon Valley 'move fast and break things' ethos is dead on arrival when intellectual property is concerned. Ethical data sourcing, meticulously vetted datasets, and robust content filtering mechanisms will transition from 'nice-to-haves' to absolute, non-negotiable prerequisites for survival.

For Creative Industries: Studios, independent artists, and individual creators are watching with bated breath. Will AI become a powerful ally, empowering new forms of expression? Or will it become a predatory force, devaluing their original works and eroding livelihoods? The outcome of such landmark cases could establish crucial, industry-defining precedents for how creative works are protected—or exploited—in this nascent age of generative AI.

For Legal Frameworks: Existing copyright laws, largely conceived in a pre-digital, pre-AI era, are visibly struggling to keep pace. This case starkly illuminates the urgent need for clearer legal definitions surrounding fair use, transformative works, and outright ownership in the complex realm of AI-generated content. Legislators and courts face an unprecedented challenge to forge clarity from chaos.

So, what unfolds next? Will ByteDance brace for a protracted, costly legal battle, or will they strategically pursue an out-of-court settlement, perhaps involving licensing agreements or substantial financial concessions? Could they be compelled to undertake a monumental re-training of Seedance 2.0 on a meticulously curated, legally clean dataset? Or implement draconian guardrails to prevent any semblance of copyrighted output? The possibilities are diverse, complex, and the stakes for both corporate behemoths—and the entire AI ecosystem—are astronomically high.

This conflict transcends a mere skirmish over a single AI model or two corporate giants. It's a foundational struggle, shaping the very future of how artificial intelligence will interact with human creativity. It's about how raw innovation is balanced against essential protection, and ultimately, who truly owns what in this rapidly evolving digital age. The legal challenges confronting generative AI are not merely intensifying; they are exploding, demanding a fundamental re-evaluation of principles once considered sacrosanct.

Disney's 'AI trophy'—that cease and desist letter—is more than just a legal document. It's a stark, undeniable reminder that the frontier of AI is as much a perilous legal and ethical battleground as it is a technological one. How will this epic confrontation play out? What indelible precedents will it etch for every developer, creator, and user building and interacting with AI? Only time, and perhaps a few landmark court rulings, will tell. But one thing is absolutely certain: the conversation surrounding AI, IP, and creativity has just become infinitely more compelling.

Imagine trying to control your new robot vacuum with a PS5 gamepad. A fun weekend project, right? For Sammy Azdoufal, this innocent pursuit quickly spiraled into a chilling cybersecurity discovery: a massive flaw exposing thousands of DJI robot vacuums to remote access. This isn't just a quirky tech tale; it's a stark, unsettling reminder of IoT's fragile security and how smart home devices can become wide-open backdoors into our most private lives.

Sammy Azdoufal, as reported by The Verge, wasn't aiming to be a cybersecurity hero. His initial goal? Simply a custom remote control for his personal DJI vacuum. Yet, when his application started pulling data for not just his device, but thousands of others, the terrifying reality hit. He could access and potentially control an alarming number of strangers' robot vacuums – devices operating right inside their homes, mapping their private spaces.

This wasn't some nation-state-level exploit. It was, by all accounts, a surprisingly straightforward vulnerability, likely stemming from glaringly poor device management and authentication protocols on DJI's backend. The sheer ease with which such widespread access was gained speaks volumes about a critical problem in the booming smart home industry: the relentless rush to market often bulldozes fundamental security considerations, prioritizing flashy features over robust protection.
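
To make that class of flaw concrete, here is a minimal sketch of the difference between a backend that returns any device to any caller and one that checks ownership on every request. This is an illustration only: the report does not publish DJI's code, so the registry, the `Device` type, and both function names are hypothetical.

```python
from dataclasses import dataclass


class Forbidden(Exception):
    """Raised when a user requests a device they do not own."""


@dataclass(frozen=True)
class Device:
    device_id: str
    owner_id: str


# Hypothetical in-memory registry standing in for a backend database.
REGISTRY = {
    "vac-001": Device("vac-001", owner_id="alice"),
    "vac-002": Device("vac-002", owner_id="bob"),
}


def get_device_unchecked(device_id: str) -> Device:
    # Flawed pattern: the lookup never asks who is calling,
    # so any caller can read any device by ID.
    return REGISTRY[device_id]


def get_device(requesting_user: str, device_id: str) -> Device:
    # Safer pattern: authorize each request against the device's owner.
    device = REGISTRY.get(device_id)
    if device is None or device.owner_id != requesting_user:
        # Same error for "missing" and "not yours", so callers
        # cannot probe which device IDs exist.
        raise Forbidden(f"no access to {device_id}")
    return device
```

With the checked version, `get_device("alice", "vac-002")` raises `Forbidden`, while the unchecked lookup would hand Bob's vacuum to anyone who guesses its ID.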

While a remotely controlled robot vacuum might sound trivial, the implications of such a flaw are anything but. Consider what these devices observe daily. They meticulously map your home's layout, navigate around furniture, and increasingly integrate cameras and microphones for advanced functions. If an unauthorized party gains remote access, they potentially unlock a treasure trove of sensitive information: the floor plan of the home and, on models equipped with them, camera and microphone feeds.

This incident serves as a chilling reminder: every connected device, from smart doorbells to refrigerators, is a potential digital Achilles' heel. The 'smart' in smart home devices must be inextricably linked with 'secure' for consumers to ever truly place their trust in this technology.

Credit is due where it's earned: DJI reportedly patched the immediate vulnerability swiftly after notification. Mitigating active threats is paramount. However, the initial discovery itself raises more profound, unsettling questions.

The DJI robot vacuum incident isn't an anomaly. We've witnessed similar security lapses with countless other smart devices, underscoring a pervasive, systemic issue across the entire Internet of Things landscape. As tech professionals, we bear a significant responsibility: to demand higher security standards from manufacturers and to diligently educate end-users about the inherent, often hidden, risks involved.

For consumers, this is a loud, clear wake-up call. Before purchasing any smart device, rigorously scrutinize the manufacturer's security track record. Prioritize brands known for robust cybersecurity, transparent privacy policies, and consistent, timely firmware updates. For manufacturers, the message couldn't be clearer: security cannot be an afterthought, bolted on post-production. It must be meticulously engineered into every single stage of the product lifecycle, from initial concept design to final deployment and ongoing support.

The saga of Sammy Azdoufal and the DJI robot vacuums transcends a mere cautionary tale. It shines an uncomfortable spotlight on the critical, urgent need for a fundamentally more secure IoT ecosystem. As our homes and lives become increasingly intertwined with connected tech, the integrity of these devices isn't just about convenience; it's about safeguarding our privacy, ensuring our physical safety, and maintaining essential trust in the very innovations designed to simplify our world.

Ring's recent announcement, severing ties with Flock Safety, initially sparked a collective sigh of relief across the tech landscape. Was this the pivotal moment? A genuine pivot away from controversial partnerships? Don't be fooled. This 'breakup' looks less like a true course correction and more like a carefully applied band-aid, barely concealing a gaping wound in Ring's public trust. The real story isn't in what Ring declared, but in the glaring omissions from its official statement.

Ring’s carefully worded statement, announcing its split from Flock Safety, was conspicuous for what it *didn't* say. Not a whisper about the storm of public backlash. No acknowledgment of its controversial ties to Immigration and Customs Enforcement (ICE). Crucially, there was no explicit, ironclad promise to address mounting user concerns regarding data sharing, privacy protocols, or its expansive engagement with law enforcement agencies. This silence isn't just notable; it's deafening. For a growing chorus of users and privacy advocates, the problem transcends a single, problematic partner. It cuts to the core of Ring's data philosophy, blurring the line between protecting your home and facilitating government surveillance. Is trust not the bedrock of any home security system? Inviting a camera into your sanctuary demands unwavering transparency, an ethical contract. Ring's persistent controversies chip away at that trust, making assurances of data security and ethical use ring hollow.

One controversial partner is gone. Good riddance, Flock Safety. But the fundamental issue? It stubbornly remains. Ring's "Community Requests" program, the pipeline allowing law enforcement to solicit doorbell camera footage from unsuspecting users, still funnels through a behemoth Department of Homeland Security (DHS) contractor: Axon. Axon isn't just a name; it's the undisputed titan of body cameras and Taser products, also operating a sprawling cloud-based digital evidence platform. This isn't a minor detail. It means even with Flock Safety out of the picture, Ring's digital veins are deeply intertwined with entities holding massive government contracts, entities with a vested interest in expanding surveillance technology. For homeowners, whose footage could potentially be siphoned into vast government databases or exploited without explicit consent, this enduring connection is profoundly unsettling. It raises pointed questions: Is Ring truly a consumer-centric home security provider? Or has it quietly evolved into an auxiliary arm of state surveillance infrastructure? What does this mean for the average family, simply seeking peace of mind, only to find themselves unwitting participants in a larger monitoring web?

This isn't merely a "Ring problem"; it's a stark, cautionary saga for the entire smart home and Internet of Things (IoT) industry. As our living spaces become increasingly interconnected, the ethical gravity of data collection, storage, and sharing intensifies exponentially. Companies that sidestep these critical concerns risk shattering the very trust their business models are built upon.

Ring's persistent entanglement with entities like Axon signals a fundamental disconnect from its user base's core anxieties. For a skeptical public, merely jettisoning one problematic partner while clutching others that wave identical ethical red flags isn't a resolution. It's a transparent, strategic maneuver, a PR smoke screen designed to deflect criticism without initiating genuine, transformative change.

To genuinely mend its fractured relationship with the public, Ring must undertake far more than a mere partner swap. It demands a seismic, philosophical overhaul.

First, unwavering, crystal-clear transparency. Ring must meticulously articulate every facet of its data sharing policies, especially concerning law enforcement and government agencies. This means explicit, digestible explanations—no more obfuscating legal jargon buried deep in terms of service.

Second, paramount user consent. Any data sharing or third-party access, particularly by governmental entities, absolutely requires clear, affirmative opt-in consent from the user. Defaulting to 'assumed' consent via convoluted terms of service is no longer acceptable.

Finally, a profound re-evaluation of its core mission. Is Ring truly a consumer-first home security provider? Or has it devolved into an ancillary data provider, a cog in broader surveillance initiatives? The unequivocal clarity of this mission will be the sole determinant of its future viability and public standing.

The Flock Safety breakup presented Ring with a golden opportunity to rewrite its narrative, to rebuild. Yet, by stubbornly remaining silent on the foundational issues and clinging to other equally controversial connections, Ring risks cementing the perception that its true problem isn't just *who* it partners with, but *what* it fundamentally stands for in an increasingly privacy-conscious, surveillance-wary world.

In an age where data is power, what happens when artificial intelligence is unleashed on a treasure trove of controversial information to expose the hidden networks of the powerful? This isn't theoretical. The team behind Jmail has launched Jikipedia, an audacious, AI-powered 'Wikipedia clone.' It meticulously compiles detailed dossiers on Jeffrey Epstein's alleged associates, all derived from his leaked emails. This isn't just a list of names. It’s a deep dive into known visits, potential knowledge of heinous crimes, and an intricate web of connections previously shrouded in secrecy. For tech professionals in the USA, this development is more than a sensational headline. It's a significant inflection point for data journalism, AI ethics, and digital accountability itself. Can Jikipedia redefine how we expose corruption and bring powerful individuals to justice?

At its core, Jikipedia functions as an online encyclopedia. But here's the crucial difference: its entries aren't crowdsourced general knowledge. They are AI-generated dossiers. The Jmail team has deployed sophisticated algorithms to process the vast, sensitive data within Epstein's infamous emails. Each entry delivers a comprehensive profile of an associate, meticulously detailing their connections. Imagine wading through a digital ocean of emails, trying to manually connect every dot. Impossible. This is where AI truly shines. It identifies patterns, cross-references data points, and synthesizes disparate information into structured, digestible profiles. The goal? To provide transparency. To make sense of a complex, disturbing network that once seemed impenetrable.

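The application of AI in Jikipedia offers a powerful glimpse into the future of investigative work. Traditional journalism, while vital, often buckles under the sheer volume and complexity of data in large-scale investigations. AI, however, excels at exactly this kind of work: spotting patterns, cross-referencing data points, and condensing scattered information into structured profiles.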

This automation of laborious data analysis empowers smaller teams. They can now undertake investigations once reserved for large intelligence agencies or well-funded media organizations. It democratizes access to powerful analytical tools, fueling transparency initiatives globally.

Exposing powerful individuals connected to Epstein’s horrific crimes garners widespread support. Yet, Jikipedia's methodology immediately sparks complex ethical questions. This is AI compiling personal data, often from leaked sources, then making it publicly accessible. On one hand, proponents argue it's a crucial step towards accountability. Figures often evade justice due to influence and wealth. This tool challenges that.

However, it also pushes critical boundaries. Privacy. Data handling. The potential for misinterpretation or unintended consequences. When AI generates a dossier, how is accuracy ensured? How are algorithmic biases avoided? Who verifies 'known visits' or 'possible knowledge of crimes' before public release? These are not trivial concerns. They underscore an urgent need for robust ethical frameworks when employing such powerful technology in the public interest. It's a tightrope walk.

Jikipedia's impact extends far beyond the specific case of Jeffrey Epstein. It serves as a potent case study. Imagine this technology applied to corporate fraud, political corruption, or human rights abuses, all based on leaked documents or public data. This could usher in an era of citizen-led digital activism. Investigative journalism, on an unprecedented scale. The implications for transparency and the power dynamics between the public and the elite are profound.

As AI tools become more accessible and sophisticated, the ability to analyze and connect dots from vast datasets will only grow. This is a game-changer for holding power accountable. But it also demands careful consideration: ethical guardrails, robust regulatory frameworks. Misuse is a real threat. Jikipedia is more than just a website. It’s a compelling demonstration of AI's potential to illuminate dark corners and challenge established power structures. For tech professionals, understanding its mechanics, its impact, and its inherent ethical dilemmas is crucial. We must confront how we want to wield these new digital tools in our collective pursuit of truth and justice.

A chilly February night in midtown. Not a friend, but *four* AI companions awaited at the pop-up EVA AI Cafe. This wasn't sci-fi; it was a real-world experiment, documented by a senior reviewer from The Verge. Her unique social venture offered a fascinating glimpse into the evolving landscape of AI dating and digital companionship.

For tech enthusiasts, or anyone curious about where human-AI interaction is headed, this report is more than a quirky anecdote. It's a seismic indicator: AI is rapidly moving from screens into our social lives, challenging perceptions of connection, intimacy, and even what constitutes a 'date.' A new frontier, indeed.

Stepping into the EVA AI Cafe, amidst the urban chill, felt plucked from a cyberpunk novel. A wine bar, aglow with a singular purple neon sign, housed a room buzzing with individuals. Each person, engrossed in their phone, was presumably engaging their own virtual partner. This wasn't accidental; it was a deliberate, curated environment. Its purpose: normalize and facilitate human-AI interaction in a public, semi-social setting.

To sit across from a human while deeply focused on a digital entity: this paradox highlights a burgeoning trend. The physical world is now actively creating spaces for digital experiences to thrive. It's a tangible sign of AI counterparts entering everyday life, subtly blurring the lines between online and offline interactions. A fascinating, unsettling blend.

The heart of the reported experience involved a journalist embarking on four distinct AI dates. While specific details remain tantalizingly private, the sheer act of having multiple 'dates' with different AI personalities in a public venue is profound. This transcends mere *chatting* with an AI; it's navigating a peculiar blend of human-like interaction within a physical, social setting. It prompts urgent questions about authenticity and emotional connection. Can a digital entity truly be a 'valentine'?

Are these AI companions engineered for perfect human mimicry? Or do they offer something entirely different – a non-judgmental ear, boundless patience, perhaps a pristine mirror to our own desires for connection? The uncanniness likely stems from their convincing human-like veneer over a fundamentally digital core. It forces us to confront our comfort levels as AI steps into such personal, intimate realms. A digital echo of ourselves.

Beyond the novelty, this pop-up AI cafe experience spotlights several critical developments in technology and society. First, it directly addresses the growing global challenge of loneliness and social isolation. For many, AI relationships could offer a form of companionship. While not replacing human connection, it might provide a meaningful supplement to it. A digital lifeline.

Second, it's a powerful demonstration of advanced conversational AI and personalization. These systems learn from every interaction, adapting to user preferences, offering tailored responses that feel incredibly personal. This capability holds profound implications, extending far beyond AI dating to customer service, education, and even therapeutic applications. Imagine an AI tutor, infinitely patient.

Finally, it inevitably raises complex ethical questions.

The EVA AI Cafe is more than a quirky pop-up; it's a vivid snapshot of our evolving relationship with technology. It challenges us to reconsider the boundaries of companionship and the very definition of a 'date.' As AI companions grow more sophisticated, more integrated into our daily routines, the questions it poses will only intensify.

Are we truly ready to embrace these digital valentines? The answer remains complex. It’s a delicate interplay of technological capability, societal acceptance, and individual psychological readiness. But one truth is clear: the future of connection will undoubtedly involve a blend of human and artificial intelligence. Experiences like the EVA AI Cafe are merely the fascinating prologue to that journey. A new chapter unfolds.

The AI gold rush is on. From ChatGPT's viral surge to enterprise-wide automation, large language models (LLMs) and sophisticated AI are reshaping every industry. But as the initial "wow" factor fades, a critical question emerges: What truly defines the next generation of AI businesses? Is merely shaving costs enough to forge lasting greatness?

Many in the tech world are currently focused on 'efficiency AI' – tools designed to streamline operations and reduce overhead. While valuable, this singular focus risks missing the forest for the trees. True innovation, sustainable growth, and undeniable market leadership in the AI era demand a bolder, more expansive vision.

The first generation of AI businesses wasn't about profit margins. It was about proving the impossible. Built on monumental breakthroughs in large models – think AlphaGo's mastery of Go, AlphaFold's protein-structure predictions, or early generative art – these pioneers showcased raw computational power. They pushed boundaries, demonstrating what was truly possible. This era, fueled by massive R&D, captivated the world. It laid the groundwork, but often at a staggering computational and financial cost.

Then came the second wave. The mantra shifted: cost reduction and time-saving. This wave brought a proliferation of 'efficiency AI' tools. Think AI-powered customer service bots handling thousands of queries, automated data entry systems replacing entire departments, or intelligent workflow managers streamlining supply chains. The business case was clear: use AI to slash operational expenses, boost productivity metrics, and deliver immediate ROI. In a tough economic climate, this made perfect sense. Quick wins. Tangible benefits.

But relying solely on efficiency is a dangerous game. You can't "shrink" your way to market dominance. A business built exclusively on making existing things cheaper or faster eventually hits a ceiling. The market for efficiency, while vast, quickly devolves into a brutal race to the bottom. Competitors offer similar savings. Margins erode. Differentiation vanishes. Is simply doing the same tasks, just cheaper, the pinnacle of innovation? We believe that's a resounding 'no.' True leaders look beyond mere optimization.

The third, or next, generation of AI businesses will pivot from merely doing existing tasks better to creating entirely new forms of value. These enterprises won't just replace workers or offer marginal efficiency gains; they will empower humans to achieve previously unimaginable feats. They will unlock new markets, solve intractable problems, and fundamentally expand the economic pie.

For founders, investors, and enterprise leaders, the message is stark: while efficiency offers short-term gains, the real opportunity lies in profound transformation. The next generation of AI businesses will be defined not by how much they save, but by how much new value they create. They will measure success not just in reduced costs, but in expanded human capabilities, novel solutions, and entirely new industries. Are you building an AI that merely trims the fat, or one that truly cooks up something revolutionary? The future of AI innovation hinges on this critical shift in mindset: from simply doing more with less, to fundamentally doing more, differently, and better.