February 20th isn’t just another date on the calendar; it’s a digital reckoning. India’s stringent new mandates on AI-generated content are poised to crash over social media giants like Instagram and X, threatening to fundamentally reshape how they manage digital information. At the heart of it? A monumental, near-impossible challenge: detecting, labelling, and rapidly removing deepfakes and other manipulated media at unprecedented scale and speed. This isn’t mere compliance; it’s a seismic shift, a litmus test for the future of online trust and for the technical capabilities of our biggest platforms.

The Impending Deadline: India’s Uncompromising Stance on Deepfakes

India, a digital powerhouse with hundreds of millions of internet users, has drawn a definitive line in the sand. As of February 20th, social media platforms operating within its borders face a dual, non-negotiable imperative:

- Rapid Removal: Illegal AI-generated material must be taken down within sharply compressed timelines. The definition of “illegal” here casts a wide net, spanning everything from explicit deepfakes to sophisticated misinformation designed to incite public unrest.

- Universal Labelling: Perhaps the most challenging directive is that AI-generated or manipulated content must be explicitly labelled, regardless of its legality or intent. This isn’t limited to malicious deepfakes; it can extend to benign AI art, automatically generated captions, and even subtle photo enhancements.

This isn’t a suggestion; it’s a mandate. And the deadline is incredibly tight. For companies built on user-generated content, where billions of pieces of media are uploaded daily, this accelerated timeline for sophisticated content moderation is not just challenging; it’s a digital Everest.

The Everest of Content Moderation: Why Deepfake Detection is So Hard

Why are some calling this deadline “impossible”? Because detecting deepfakes and other AI-generated content isn’t like finding a needle in a haystack; it’s like trying to catch smoke in an ever-shifting digital storm. Here’s why this is an unparalleled technical and operational challenge:

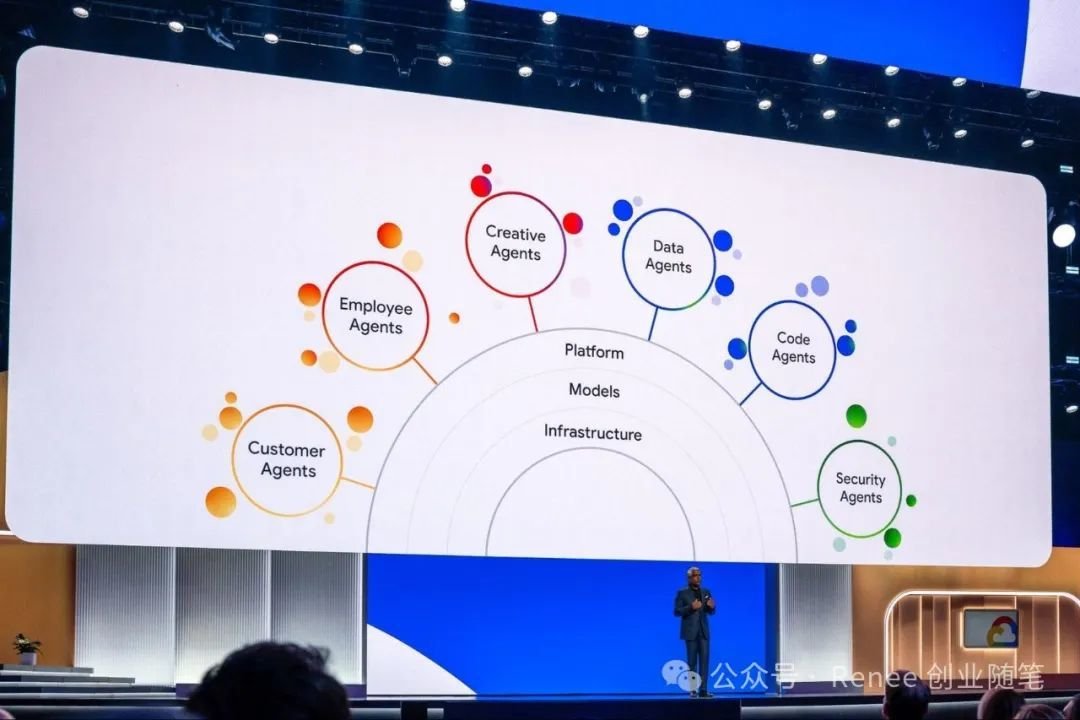

- The Evolving AI Arms Race: Deepfake technology advances daily. Generation techniques that left telltale artifacts last year now produce photorealistic output. Each new generation of models makes synthetic content harder to spot, even for other AIs. It’s a relentless cat-and-mouse game.

- Scale and Speed: Imagine the sheer volume: billions of images, videos, and audio clips uploaded to Instagram and X every day. Each piece would theoretically need to be scanned, analyzed by advanced AI, and then, if applicable, accurately labelled, all in near real time. The processing power and sophisticated algorithms required are staggering.

- Subtlety and Nuance: Not all AI manipulation is egregious. How do platforms differentiate between a harmless filter, a comedic parody, an art piece, and a malicious deepfake designed to spread misinformation or defame? The contextual understanding required goes far beyond simple pattern recognition, which tends to flatten human intent into a yes-or-no verdict.

- Ethical Minefield: Who decides what constitutes “manipulated” content? Does a light photo edit count? What about an AI tool that cleans up background noise in an audio recording? The definition itself is fraught with potential for over-moderation or censorship, creating a slippery slope.

- Language and Cultural Labyrinth: India is a country of immense linguistic and cultural diversity. Detecting nuanced manipulation or out-of-context content requires models trained on a vast array of local contexts, dialects, and social norms, a significant and often overlooked development hurdle.

This isn’t just about having good AI detectors; it’s about having *perfect* AI detectors that can scale globally, instantly, and with absolute accuracy—a capability that currently exists only in science fiction.

Beyond Borders: Global Implications of India’s Mandate

While this mandate originates in India, its reverberations will be felt globally. What happens on February 20th, and in the weeks that follow, will set a powerful precedent:

- Regulatory Domino Effect: Regulators elsewhere, including in the EU and the US, are grappling with the rise of deepfakes and AI misinformation and will be watching closely. If India’s aggressive approach proves effective (or even partially effective), it could become a blueprint, emboldening regulators worldwide to enact similar, equally demanding laws.

- An AI Safety Investment Race: This mandate will force platforms to pour even more resources, potentially billions of dollars, into their AI detection and content moderation capabilities. This could accelerate innovation in AI safety, but also significantly increase operational costs for tech companies.

- The Future of User-Generated Content: Will platforms become more risk-averse about what content they allow? Could this lead to more automated rejections or delays in posting, impacting creators and everyday users? The line between protecting freedom of expression and ensuring digital safety is razor-thin.

- Trust Reimagined: Ultimately, the goal is to rebuild trust in online content. If platforms can effectively label AI-generated content, users will be better equipped to judge what they consume. But the risk of failure is high: inconsistent or biased systems could erode trust even further.

What’s Next? A Battle for the Soul of the Internet

The February 20th deadline is here. For Instagram, X, and others, it’s a sprint against the clock and against ever-evolving digital threats. This isn’t just about removing illegal content; it’s a fundamental redefinition of how digital authenticity is managed online.

Will platforms be able to meet India’s challenge, or will they face severe penalties and a crisis of credibility? The coming weeks will unveil much about the true capabilities—and stark limitations—of our current AI and content moderation systems. The battle for the internet’s soul has just been dramatically escalated, and the entire tech world is watching.